1. ISSUE SUMMARY

1.1 Evidence that Climate Models Produce Far Too Much Warming

The issue I will address is appropriately introduced by a quote from a leading cloud expert, Dr. Robert Cess, made over ten years ago:

“the [climate models] may be agreeing now simply because they’re all tending to do the same thing wrong. It’s not clear to me that we have clouds right by any stretch of the imagination.”

– Dr. Robert Cess, quoted in Science (May 16, 1997, p. 1040)

Nowhere in climate models is there greater potential for error than in the treatment of clouds. This is especially true of low clouds, which cool the climate system, and which the IPCC has admitted are the largest source of uncertainty in global warming projections (IPCC, 2007).

Research published by us since the IPCC 2007 4th Assessment Report (IPCC AR4) suggests that a major problem exists with most, if not all, of the IPCC models’ cloud parameterizations. Cloud parameterizations are greatly simplified methods for creating clouds in climate models. Their simplicity is necessary because the processes controlling clouds are too complex to include in climate models. Yet those same parameterizations are critical to model projections of future global temperatures and climate, since clouds determine how much sunlight is allowed into the climate system. Significantly, all 21 IPCC climate models today decrease global average cloud cover in response to any warming influence, such as that from anthropogenic carbon dioxide emissions, thus amplifying the small, direct warming effect of more CO2.

In stark contrast, though, new analyses of our latest and best NASA satellite data suggest that the real climate system behaves in exactly the opposite manner. This error, by itself, could mean that future warming projected by these models has been overstated by anywhere from a factor of 2 to 6.

How could such a serious error be made by so many climate experts? In a nutshell, when previous researchers have looked at how clouds and temperature have varied together in the real climate system, they have assumed that the observed temperature changes caused the observed cloud changes – but not the other way around.

As I will demonstrate, by assuming causation in only one direction they have biased their interpretation of cloud behavior in the direction of positive feedback (that is, high climate sensitivity). The existence of the problem was first published by us on 1 November 2008 in the Journal of Climate (Spencer and Braswell, 2008). It supported our previously published research, which showed that weather systems in the tropics also behave in the opposite manner to climate models (Spencer et al., 2007); that is, they reduce any warming influence rather than magnify it.

My claim on the direction of causation is more recently supported by evidence that one can distinguish cause from effect under certain conditions, in both satellite observations of the real climate system, and in climate models themselves. The result is that true negative feedback in the climate system has been obscured by the dominating influence of natural cloud changes causing temperature changes, which has produced the illusion of a sensitive climate system.

1.2 Natural Cloud Variations Might Have Caused “Global Warming”

If feedbacks in the climate system are indeed negative rather than positive, not only does this mean anthropogenic global warming might well be lost in the noise of natural climate variability, it also means that CO2 emissions have been insufficient to cause most of the warming seen in the past 50 years or so. Just as researchers have been misled about climate sensitivity by ignoring clouds-causing-temperature change, they have also neglected natural cloud variations as a potential source of climate change itself.

While the IPCC claims they can only explain late 20th Century warming when they include anthropogenic CO2 emissions in the models, this is mostly because sufficiently accurate long-term global cloud observations simply do not exist with which one might look for natural sources of climate change. A persistent change of only 1% or 2% in global-average low cloud cover would be sufficient to cause global warming – or cooling. Our ability to measure long-term cloud changes to this level of precision has existed only since the launch of NASA’s Terra satellite in 2000, and so it is not possible to directly determine whether there are natural cloud changes that might have caused most of the climate variability seen over the last 50 to 100 years.

But even though such long-term observations do not exist, one could instead study known natural climate indices such as the Pacific Decadal Oscillation (PDO, Mantua et al., 1997) during the roughly 10-year period since the launch of Terra to look for evidence that known natural modes of climate variability modulate global average cloud cover. This kind of research should be required before one even begins to discuss ruling out natural climate variability as a source of climate change. Unfortunately, this type of research has never been performed by anyone.

The failure to sufficiently investigate natural, internal modes of climate variability by the climate research and modeling community is a major failing of the IPCC and the CCSP processes. Under the Federal Information Quality Act, the U.S. science process must be held to a higher standard of objectivity and utility.

The discussion below demonstrates why EPA cannot use either the IPCC or CCSP conclusions as a basis for its Endangerment Finding since both depend on the same flawed models.

2. SPECIFIC ERRORS IN THE EF/TSD

I will be addressing the following endangerment finding (EF) and technical support document (TSD) statements, specifically challenging the portions in bold italics, below.

Endangerment Finding:

EF-18896.2: Most of the observed increase in global average temperatures since the mid-20th century is very likely due to the observed increase in anthropogenic greenhouse gas concentrations. Global observed temperatures over the last century can be reproduced only when model simulations include both natural and anthropogenic forcings, that is, simulations that remove anthropogenic forcings are unable to reproduce observed temperature changes. Thus, most of the warming cannot be explained by natural variability, such as variations in solar activity.

TSD Executive Summary:

Observed Effects Associated with Global Elevated Concentrations of GHGs:

[OE 2] The global average net effect of the increase in atmospheric GHG concentrations, plus other human activities (e.g., land use change and aerosol emissions), on the global energy balance since 1750 has been one of warming. This total net heating effect, referred to as forcing, is estimated to be +1.6 (+0.6 to +2.4) Watts per square meter (W/m2), with much of the range surrounding this estimate due to uncertainties about the cooling and warming effects of aerosols. The combined radiative forcing due to the cumulative (i.e., 1750 to 2005) increase in atmospheric concentrations of CO2, CH4, and N2O is estimated to be +2.30 (+2.07 to +2.53) W/m2. The rate of increase in positive radiative forcing due to these three GHGs during the industrial era is very likely to have been unprecedented in more than 10,000 years.

[OE 4] Most of the observed increase in global average temperatures since the mid-20th century is very likely due to the observed increase in anthropogenic GHG concentrations. Climate model simulations suggest natural forcing alone (e.g., changes in solar irradiance) cannot explain the observed warming.

Projections of Future Climate Change with Continued Increases in Elevated GHG Concentrations:

[PF 2] Future warming over the course of the 21st century, even under scenarios of low emissions growth, is very likely to be greater than observed warming over the past century.

[PF 3] All of the U.S. is very likely to warm during this century, and most areas of the U.S. are expected to warm by more than the global average.

2.1 Comments

What follows is evidence against the familiar IPCC AR4 claim, which also appears in both the EF and TSD:

“Most of the observed increase in global average temperatures since the mid-20th century is very likely due to the observed increase in anthropogenic greenhouse gas concentrations.”

As we will see, this claim is premature at best. I begin with a discussion of feedbacks (which determine climate sensitivity) because the IPCC’s belief in a sensitive climate system is central to their claim that global warming is mostly anthropogenic, and not natural.

2.1.1 The Importance of Climate Sensitivity to Demonstrating Causation in Global Warming

The central issue of causation in global warming is closely related to ‘climate sensitivity’, which can be defined as the amount of warming the Earth experiences in response to a radiative forcing (global average imbalance in sunlight gained versus thermally emitted infrared radiation lost).

If climate sensitivity is relatively high, as the IPCC claims, then I agree that anthropogenic greenhouse gas emissions might well be the main reason for most of the warming experienced in the last 50 years. This is because the small amount of radiative forcing from anthropogenic greenhouse gases would be sufficient to explain past warming if that small warming is amplified by positive feedback, which is what produces high climate sensitivity.

But if climate sensitivity is low, then anthropogenic emissions would be too weak to cause substantial warming. Some stronger, natural mechanism would need to be mostly responsible for the warming we have experienced. Low climate sensitivity would additionally mean that any source of anthropogenic forcings (greenhouse gases, aerosols, etc.) would not substantially affect climate, and therefore a reduction in emissions would have little effect on climate.

This is partly why the IPCC is not motivated to find natural sources of climate change. If the climate system is quite sensitive, then the extra carbon dioxide in the atmosphere alone is sufficient to explain past warming.

So, what determines feedbacks, and thus climate sensitivity? Simply put, climate sensitivity depends upon whether clouds (and other elements of the climate system) respond to the small amount of warming caused by the 1+ W/m2 radiative forcing from anthropogenic greenhouse gases by either amplifying it through ‘positive feedbacks’, or by reducing it through ‘negative feedbacks’. Depending upon climate sensitivity, the long-term warming from increasing atmospheric greenhouse gas concentrations could theoretically be anywhere from unmeasurable to catastrophic. Climate sensitivity thus becomes the main determinant of the level of future anthropogenic global warming, and how it then compares in magnitude to natural sources of climate variability.

The IPCC has admitted that feedbacks from low clouds (which have a large impact on how much sunlight reaches the Earth’s surface) are the most uncertain of all the feedbacks that determine climate sensitivity, and therefore constitute the largest source of uncertainty in projections of future global warming (IPCC, 2007). Indeed, Trenberth & Fasullo (2009) found that most of the differences between the IPCC climate models’ warming projections can be traced to how they change low and middle cloud cover with warming. Recent work by Caldwell and Bretherton (2009) with a sophisticated cloud resolving model has produced results supporting negative, not positive, low cloud feedback.

I now believe that the low cloud feedback issue is even more serious than the IPCC has admitted. Next we will examine why I believe there has been so much uncertainty over cloud feedback in the climate system.

2.1.2 Why Previous Observational Estimates of Climate Sensitivity have been Inconclusive

Previous estimates of climate sensitivity from observational data have led to confusing and inconclusive results (Knutti and Hegerl, 2008). The most recently published satellite estimates of climate sensitivity (Forster and Gregory, 2006, hereafter FG06) and IPCC AR4 climate model sensitivity (Forster and Taylor, 2006, hereafter FT06) led FT06 to conclude that the satellite-based results could not be trusted. At face value, their best estimate from satellite observations implied a less sensitive climate than any of the IPCC models, but they concluded that the uncertainty in the satellite estimate was so large that it was of little value anyway.

But we have determined that the reason researchers have been unable to pin down a reasonable estimate of climate sensitivity is because cause and effect have not been accounted for when measuring the co-variations between cloud cover (or radiative flux) and temperature. We have evidence that the true signature of feedback in the data has been mostly obscured by natural cloud variations forcing temperature variations.

The problem can be illustrated with the following example: If global average cloudiness is observed to decrease in warmer years, this would normally be interpreted as warming causing a cloud decrease, which would be positive feedback, which would mean higher climate sensitivity. But what if the warming was the result of the decrease in cloud coverage, rather than the cause of it?

As demonstrated by Spencer and Braswell (2008) with a simple model of global-average climate, the presence of something as simple as daily random variations in clouds will cause feedbacks diagnosed from measurements of temperature and cloudiness (actually, the radiative imbalance of the Earth) to be biased in the direction of positive feedback (high climate sensitivity). That paper was reviewed by two IPCC experts, Piers Forster and Isaac Held, who both agreed that our paper raised a valid and potentially important issue.
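The bias described above can be illustrated with a minimal sketch of a forcing-feedback model. This is not the published Spencer and Braswell (2008) code. It assumes a single ocean layer obeying Cp dT/dt = N + S - λT, where N is random radiative (cloud-like) forcing, S is random non-radiative forcing, and λ is the feedback parameter. The layer depth, noise amplitudes, averaging window, feedback value, and all function names are illustrative assumptions.

```python
import random

def simulate(feedback, sigma_rad, sigma_nonrad, n_days=180_000,
             window=90, depth_m=50.0, seed=1):
    """Return window-averaged (temperature, net radiative loss) series."""
    rng = random.Random(seed)
    heat_capacity = 4.18e6 * depth_m   # J m^-2 K^-1 for a water layer (assumed depth)
    dt = 86400.0                       # one day, in seconds
    temp = 0.0
    temps, fluxes = [], []
    t_sum = f_sum = 0.0
    for day in range(1, n_days + 1):
        rad = rng.gauss(0.0, sigma_rad)        # radiative forcing N (W/m^2)
        nonrad = rng.gauss(0.0, sigma_nonrad)  # non-radiative forcing S (W/m^2)
        temp += dt * (rad + nonrad - feedback * temp) / heat_capacity
        t_sum += temp
        # Net radiative loss seen from space: feedback response minus forcing N.
        f_sum += feedback * temp - rad
        if day % window == 0:
            temps.append(t_sum / window)
            fluxes.append(f_sum / window)
            t_sum = f_sum = 0.0
    return temps, fluxes

def regression_slope(x, y):
    """Ordinary least-squares slope of y regressed on x."""
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx

TRUE_FEEDBACK = 6.0  # W/m^2 per deg C, an illustrative value

# Case 1: temperature is driven only by non-radiative forcing.
slope_clean = regression_slope(*simulate(TRUE_FEEDBACK, 0.0, 10.0))
# Case 2: random radiative forcing also drives temperature.
slope_mixed = regression_slope(*simulate(TRUE_FEEDBACK, 10.0, 10.0))

print(f"specified feedback:                {TRUE_FEEDBACK:.2f}")
print(f"diagnosed, no radiative forcing:   {slope_clean:.2f}")
print(f"diagnosed, with radiative forcing: {slope_mixed:.2f}")
```

Under these assumptions the first case recovers the specified feedback, because the radiative flux is then a pure response to temperature. In the second case the regression slope should fall well below the specified value, which would be read as a more sensitive climate than the one actually built into the model.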

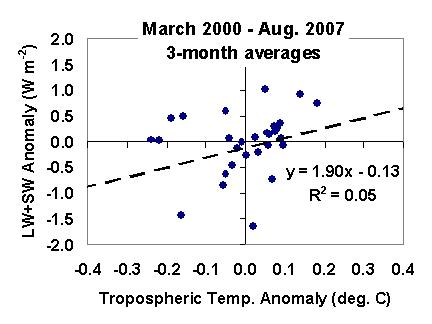

What follows is quantitative evidence that suggests why this mix-up between cause and effect causes the illusion of high climate sensitivity. (The paper describing this evidence is under revision, to be resubmitted to the Journal of Geophysical Research after we address questions raised by its three reviewers.) The causation issue can be illustrated with the following graph of 7.5 years of recent satellite-measured global ocean average variations in tropospheric temperature versus top-of-atmosphere total radiative flux (‘LW’ is emitted infrared, ‘SW’ is reflected sunlight). Both of these datasets are publicly available.

Fig. 1. Global oceanic 3-month averages of net radiative flux variations (reflected sunlight + thermally emitted infrared) from the CERES radiation budget instrument flying on NASA’s Terra satellite, plotted against corresponding tropospheric temperature variations estimated from channel 5 of the Advanced Microwave Sounding Unit (AMSU) flying on the NOAA-15 satellite.

The slope of the dashed line, fit to the data with statistical ‘regression’, would traditionally be assumed to provide an estimate of feedback, and therefore of climate sensitivity. Regression line slopes are what FG06 used to diagnose feedbacks in satellite data, and what FT06 used to diagnose feedbacks in climate models. The greater the slope of the regression line, the less sensitive the climate system; the shallower the slope of the line, the greater the climate sensitivity. Since a line slope of 3.3 Watts per sq. meter per degree C represents the border between positive and negative feedback, the slope of the line in Fig. 1 (1.9 Watts per sq. meter per deg. C) would, at face value, correspond to moderate positive feedback.
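The relationship between line slope and climate sensitivity can be made concrete with a short sketch. It assumes the usual equilibrium relation, warming = forcing / feedback parameter, and a forcing of 3.7 W/m2 for a doubling of CO2. That forcing value is an assumption, since it is not stated in this document, and the function names are hypothetical.

```python
# Assumed radiative forcing from a doubling of CO2 (W/m^2); not given in the text.
FORCING_2XCO2 = 3.7
# Slope marking the border between positive and negative feedback (from the text).
NO_FEEDBACK_SLOPE = 3.3

def feedback_sign(slope):
    """Classify a regression slope (W/m^2 per deg C) relative to the border value."""
    if slope > NO_FEEDBACK_SLOPE:
        return "negative"
    if slope < NO_FEEDBACK_SLOPE:
        return "positive"
    return "neutral"

def warming_per_doubling(slope):
    """Equilibrium warming (deg C) for doubled CO2 under the assumed forcing."""
    if slope <= 0:
        raise ValueError("slope must be positive for a stable climate")
    return FORCING_2XCO2 / slope

for slope in (1.9, 3.3, 6.0):
    print(f"{slope:.1f} W/m^2 per deg C: {feedback_sign(slope)} feedback, "
          f"{warming_per_doubling(slope):.1f} deg C per CO2 doubling")
```

With this assumed forcing, the Fig. 1 slope of 1.9 corresponds to roughly 1.9 deg. C of warming per doubling, while a slope of 6 corresponds to roughly 0.6 deg. C.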

But note the huge amount of scatter in the data. This is an example of why researchers have not trusted previous satellite estimates of feedback. If the data points happened to cluster nicely along a line, then we would have more confidence in the diagnosed feedback. But instead we see data scattered all over. This scatter translates directly into uncertainty in the slope of the line, which means uncertainty in feedback, which means uncertainty in climate sensitivity.

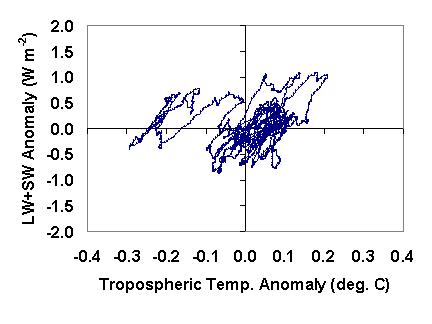

This is the point at which other researchers have stopped in their analysis of the satellite data, concluding that satellite estimates of feedbacks are too uncertain to be of much use. But we have determined that researchers have not dug deep enough in their data analysis. It turns out that the scatter in the data is mostly the result of cloud variations causing temperature variations, which is causation in the opposite direction to feedback. If the data in Fig. 1 are plotted as running averages, rather than independent averages, and the successive data points in time are connected by lines, certain patterns begin to emerge, as is shown in Fig. 2.

Fig. 2. As in Fig. 1, but now 3-month averages are computed every day and connected by lines, revealing the time history of how the climate system evolves.

This method of plotting is called phase space analysis, and it can “easily elucidate qualities of (a) system that might not be obvious otherwise” (Wikipedia.com entry on “Phase Space”). What we now see instead of a seemingly random scatter of points is a series of linear striations and looping or spiraling patterns.
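The difference between the independent averages of Fig. 1 and the running averages of Fig. 2 can be sketched as follows. The function names and the toy daily values are hypothetical and serve only to show how a connected phase-space path is built from two time series.

```python
def block_means(values, window):
    """Independent (non-overlapping) averages, as in a scatter plot like Fig. 1."""
    return [sum(values[i:i + window]) / window
            for i in range(0, len(values) - window + 1, window)]

def running_means(values, window):
    """Averages recomputed at every time step, as in a plot like Fig. 2."""
    if len(values) < window:
        raise ValueError("need at least one full window of data")
    total = sum(values[:window])
    means = [total / window]
    for i in range(window, len(values)):
        total += values[i] - values[i - window]  # slide the window by one step
        means.append(total / window)
    return means

def phase_space_path(temps, fluxes, window):
    """Time-ordered (temperature, flux) points to be connected by lines."""
    return list(zip(running_means(temps, window),
                    running_means(fluxes, window)))

# Hypothetical daily anomalies, for illustration only.
daily_temp = [0.0, 0.1, 0.2, 0.3, 0.2, 0.1]
daily_flux = [0.5, 0.2, -0.1, 0.4, 0.9, 1.1]
path = phase_space_path(daily_temp, daily_flux, window=3)
for temp, flux in path:
    print(f"{temp:+.2f} deg C, {flux:+.2f} W/m^2")
```

Because successive running averages share most of their data, consecutive points lie close together, and it is this continuity that lets lines and loops appear where independent averages show only scatter.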

A very simple forcing-feedback model widely used in climate studies (e.g. Spencer and Braswell, 2008) can be used to show that the linear features are temperature changes causing cloud-induced radiative changes (that is, feedback); while the looping features are from causation in the opposite direction: cloud variations causing temperature variations.

Significantly, it is the natural cloud variations causing temperature variations that de-correlate the data, leading to a regression line slope biased in the direction of high climate sensitivity (positive feedback) like that seen in Fig. 1.

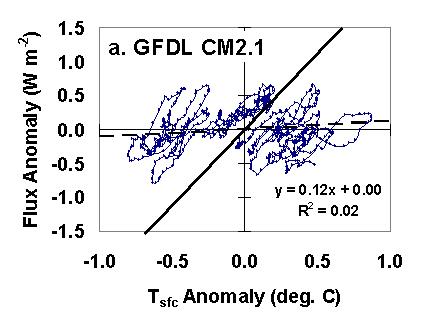

We also find the spiral and linear features in the climate models tracked by the IPCC, for instance in the GFDL CM2.1 model shown in Fig. 3.

Fig. 3. As in Fig. 2, but for yearly global averages plotted every month from the GFDL CM2.1 climate model. The dashed line is a regression fit to the data; the slope of the solid line represents the model’s long-term feedback in response to anthropogenic radiative forcing from greenhouse gases as diagnosed by FT06.

It is important to note that the linear striations in Fig. 3 are approximately parallel to the ‘true’ long-term feedback as diagnosed for this model by FT06, which is indicated by the solid line. This means that the slope of the short-term linear striations is indeed an indication of the long-term feedback in the model, and therefore of the climate sensitivity of that model. We find obvious striations (‘feedback stripes’) in five of the IPCC climate models, and in all five cases their average slope is very close to the long-term feedback in those models diagnosed by FT06.

While the above analysis might seem a little technical, it is merely a way to quantitatively demonstrate how a mix-up between cause and effect between clouds and temperature can lead to the illusion of a sensitive climate system.

2.1.3 Feedbacks Revealed In Satellite Data

Using this new insight, if we now return to the linear striations seen in the satellite data plotted in Fig. 2, we find their slope to be about 6 Watts per sq. meter per degree C, which would correspond to strongly negative feedback. This is about the same feedback value that Spencer et al. (2007) found for a composite of tropical weather systems over a multi-year period. If this is the feedback operating in the real climate system on the long time scales involved with manmade global warming, then the amount of warming from a doubling of carbon dioxide would only be about 0.6 deg. C, which is at least a factor of 4 less than the IPCC’s best estimate for the future. This casts serious doubt upon the projections of future climate change mentioned in the EF and TSD:

[PF 2] Future warming over the course of the 21st century, even under scenarios of low emissions growth, is very likely to be greater than observed warming over the past century.

Similarly, all projections of substantial future regional climate change, such as:

[PF 3] All of the U.S. is very likely to warm during this century, and most areas of the U.S. are expected to warm by more than the global average.

also depend upon high climate sensitivity, and so are similarly called into question.

As far as I know, we are the only research group performing this kind of research. I believe that much greater exploitation of our satellite data resources is required to understand what the climate system is trying to tell us about climate sensitivity before we can place any level of confidence in the climate model projections relied upon by the IPCC, and thus by the EPA. The above evidence suggests that previous tests of climate models with observational data have not been sufficiently detailed to validate the feedbacks (climate sensitivity) in those models. At a minimum, the models need to be adjusted to mimic the behavior seen in the real climate system, such as that described above, with methods (e.g. phase space analysis) that can reveal the separate signatures of temperature-forcing-clouds (feedback) from clouds-forcing-temperature (internal radiative forcing).

This is an important point, and it is worth repeating: none of the previous comparisons of climate model output to satellite data have been sufficient to test the climate sensitivity of those models. Unless one accounts in some way for the direction of causation when comparing temperature variations to cloud (or radiative flux) variations, any observational estimates of feedback are likely to be spuriously biased in the direction of high climate sensitivity.

2.1.4 The Potential Role of Natural Cloud Variations in Causing Climate Change

The existence of negative feedback in the climate system would have two important consequences: (1) future anthropogenic climate change can be expected to be small, possibly even unmeasurable in the face of natural climate variability; and (2) increasing greenhouse gas concentrations are insufficient to have caused past warming of the climate system. One or more natural sources of climate change would need to be involved.

And this is where natural cloud variations once again enter the picture. While the IPCC only mentions “external” forcings (radiative imbalances) on the climate system due to volcanoes, anthropogenic pollution, and output from the sun, it is also possible for “internal” forcings such as natural cloud changes to cause climate change. This could come about simply through small natural changes in the general circulation of the ocean and atmosphere, for instance from the Pacific Decadal Oscillation (Mantua et al., 1997), or other known (or even unknown) modes of natural climate variability. Spencer and Braswell (2008) showed that even daily random cloud variations over the ocean can lead to substantial decadal time scale variability in ocean temperatures.

It is critical to understand that the IPCC assumes, either explicitly or implicitly, that such natural cloud variations do not occur on the time scales involved in climate change. Quoting EF-18896.2,

“Global observed temperatures over the last century can be reproduced only when model simulations include both natural and anthropogenic forcings, that is, simulations that remove anthropogenic forcings are unable to reproduce observed temperature changes. Thus, most of the warming cannot be explained by natural variability, such as variations in solar activity.”

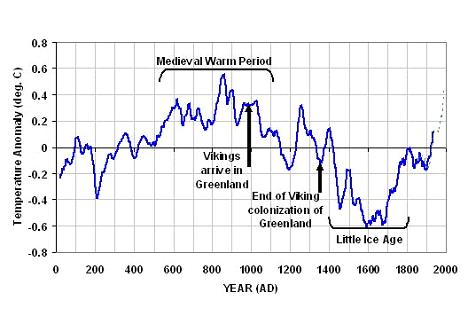

This statement misleadingly implies that the IPCC knows all of the important natural sources of climate change – but they do not. The IPCC specifically ignores any potential internally-generated sources of climate change…what the public would simply call “natural cycles” in climate. While they do not mention it, the IPCC can do this because we do not have global measurements over a long enough period of time to measure small changes in global average cloud cover. All it would take is 1% or 2% fluctuations to cause the kinds of variability in global temperatures exhibited by the following plot (Fig. 4) of global temperature proxy measurements over the last 2,000 years (Loehle, 2007).

Fig. 4. Non-treering proxy reconstruction of global temperature variations over the past 2,000 years (Loehle, 2007).

In fact, if this reconstruction of past temperature variations is anywhere close to being realistic it suggests there is no such thing as “average climate”. As can be seen, substantial temperature changes on 50 to 100 year time scales such as what was observed over the 20th Century have been the rule, not the exception. Periods of steady temperatures are actually quite unusual.

The cause of such natural changes is still unknown to science. Some will argue sunspot activity has modulated global cloud amounts, which is one possibility for external forcing. But another possibility, as alluded to above, is that the climate system causes its own climate change. For instance, we know that “chaos” happens in weather (e.g. Lorenz, 1963), the result of complex nonlinear interactions within, and between, weather systems. But given the much longer (e.g. decadal to centennial) timescales involved in the ocean circulation, there is no reason why chaotic variations cannot also occur in climate (e.g. Tsonis et al., 2007).

Therefore, the claim by the IPCC that warming can only be produced by climate models when anthropogenic greenhouse gases are included misleadingly implies that climate does not change naturally. But this is merely an assumption enabled by a lack of data to demonstrate otherwise – not an inference from analysis of existing data.

And again, the easiest way for such changes to occur would be for small changes in atmospheric and oceanic circulation systems to cause small changes in global average cloud cover. For instance, if a small decrease in cloud cover occurs over the ocean, then the ocean will respond by warming. This will, in turn, cause warming and humidifying of oceanic air masses. These warmer and moister air masses then flow across the continents, where even greater warming can result from the natural greenhouse effect of more water vapor contained in the air masses. This was recently demonstrated by Compo and Sardeshmukh (2009), and it demonstrates that “global warming” can indeed be generated naturally. Because of this, there probably is no reliable ‘fingerprint’ of anthropogenic climate change.

Therefore, the TSD statement that “The rate of increase in positive radiative forcing due to these three GHGs during the industrial era is very likely to have been unprecedented in more than 10,000 years”, is nothing more than a statement of faith, and completely ignores the possibility that there have been much larger natural changes in greenhouse gases in the past — specifically, in water vapor…the Earth’s main greenhouse gas.

Again, past changes in atmospheric temperature and water vapor – and thus in the Earth’s greenhouse effect – can be caused by natural changes in oceanic cloud cover modulating the amount of sunlight that is absorbed by the ocean. This possibility is ignored by the IPCC, who simply assume that the climate system was in a perpetual state of radiative energy balance until humans came along and upset that balance.

3. FINAL REMARKS

As we have seen, there is considerable evidence – both theoretical and observational — to distrust the IPCC’s claim that global warming is mostly anthropogenic in origin. The work described above — some published in the peer reviewed scientific literature by us and by others, some in the process of being published — strongly suggests the IPCC AR4 climate models produce too much global warming, possibly by a wide margin.

As discussed above, the most important reason why climate models are too sensitive is, in my view, their neglect of the effect that natural cloud changes in the climate system have on (1) climate sensitivity estimates, and (2) past climate change, such as the global-average warming which occurred in the 20th Century.

The failure to investigate all natural processes by the climate research and modeling communities is a major failing of the IPCC and the CCSP processes. Under the Federal Information Quality Act, the U.S. science process must be held to higher standards of objectivity and utility. Clearly, there is as yet little scientific basis for making an “Endangerment Finding” related to carbon dioxide.

REFERENCES CITED

Caldwell, P., and C. S. Bretherton, 2009. Response of a subtropical stratocumulus-capped mixed layer to climate and aerosol changes. Journal of Climate, 22, 20-38.

Compo, G.P., and P. D. Sardeshmukh, 2009. Oceanic influences on recent continental warming, Climate Dynamics, 32, 333-342.

Forster, P. M., and J. M. Gregory, 2006. The climate sensitivity and its components diagnosed from Earth Radiation Budget data, J. Climate, 19, 39-52.

Forster, P.M., and K.E. Taylor, 2006. Climate forcings and climate sensitivities diagnosed from coupled climate model integrations, J. Climate, 19, 6181-6194.

Intergovernmental Panel on Climate Change, 2007. Climate Change 2007: The Physical Science Basis, report, 996 pp., Cambridge University Press, New York City.

Knutti, R., and G. C. Hegerl, 2008. The equilibrium sensitivity of the Earth’s temperature to radiation changes, Nature Geoscience, 1, 735-743.

Loehle, 2007. A 2,000 year global temperature reconstruction on non-treering proxy data. Energy & Environment, 18, 1049-1058.

Lorenz, E.N., 1963. Deterministic non-periodic flow, Journal of the Atmospheric Sciences, 20, 130–141.

Mantua, N. J., S.R. Hare, Y. Zhang, J.M. Wallace, and R.C. Francis, 1997. A Pacific interdecadal climate oscillation with impacts on salmon production. Bulletin of the American Meteorological Society, 78, 1069-1079.

Spencer, R.W., W. D. Braswell, J. R. Christy, and J. Hnilo, 2007. Cloud and radiation budget changes associated with tropical intraseasonal oscillations, Geophys. Res. Lett., 34, L15707 doi:10.1029/2007GL029698.

Spencer, R.W., and W.D. Braswell, 2008. Potential biases in cloud feedback diagnosis: A simple model demonstration, J. Climate, 21, 5624-5628.

Trenberth, K.E., and J.T. Fasullo, 2009. Global warming due to increasing absorbed solar radiation. Geophysical Research Letters, 36, L07706, doi:10.1029/2009GL037527.

Tsonis, A. A., K. Swanson, and S. Kravtsov, 2007. A new dynamical mechanism for major climate shifts. Geophysical Research Letters, 34, L13705, doi:10.1029/2007GL030288.
