UPDATE: I’ve appended the results for the U.S. only, which show evidence that CRUTem3 has overstated U.S. warming trends during 1973-2011 by at least 50%.

The supposed gold standard in surface temperature data is the one produced by the University of East Anglia, the so-called CRUTem3 dataset. There has always been a lingering suspicion among skeptics that this official IPCC temperature record contains some residual spurious warming due to the urban heat island effect. Several published papers over the years have supported that suspicion.

The Urban Heat Island (UHI) effect is familiar to most people: towns and cities are typically warmer than surrounding rural areas due to the replacement of natural vegetation with manmade structures. If that effect increases over time at thermometer sites, there will be a spurious warming component to regional or global temperature trends computed from the data.

Here I will show based upon unadjusted International Surface Hourly (ISH) data archived at NCDC that the warming trend over the Northern Hemisphere, where virtually all of the thermometer data exist, is a function of population density at the thermometer site.

Depending upon how low in population density one extends the results, the level of spurious warming in the CRUTem3 dataset ranges from 14% to 30% when 3 population density classes are considered, and even 60% with 5 population classes.

DATA & METHOD

Analysis of the raw station data is not for the faint of heart. For the period 1973 through 2011, there are hundreds of thousands of data files in the NCDC ISH archive, each file holding one year of data from one station. The data volume is many gigabytes.

From these files I computed daily average temperatures at each station which had records extending back at least to 1973, the year of a large increase in the number of global stations included in the ISH database. The daily average temperature was computed from the 4 standard synoptic times (00, 06, 12, 18 UTC) which are the most commonly reported times from stations around the world.
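A minimal sketch of the daily averaging step, for readers who want to try something similar. The tuple layout, the function name `daily_means`, and the rule that all four synoptic reports must be present for a day to count are assumptions for illustration; the text above does not specify how days with missing reports were handled.

```python
from collections import defaultdict

SYNOPTIC_HOURS = (0, 6, 12, 18)  # UTC hours named in the text


def daily_means(observations):
    """Average the four synoptic reports for each day.

    observations: iterable of (date_str, hour_utc, temp_c) tuples
    (a hypothetical layout, not the ISH file format).
    Days missing any of the four reports are dropped (an assumption).
    """
    by_day = defaultdict(dict)
    for day, hour, temp in observations:
        if hour in SYNOPTIC_HOURS and temp is not None:
            by_day[day][hour] = temp
    return {
        day: sum(temps.values()) / len(SYNOPTIC_HOURS)
        for day, temps in by_day.items()
        if len(temps) == len(SYNOPTIC_HOURS)
    }


# Synthetic example values, not real station data.
obs = [
    ("1973-01-01", 0, -2.0), ("1973-01-01", 6, -4.0),
    ("1973-01-01", 12, 1.0), ("1973-01-01", 18, 3.0),
    ("1973-01-02", 0, -1.0), ("1973-01-02", 6, None),  # missing report
    ("1973-01-02", 12, 2.0), ("1973-01-02", 18, 4.0),
]
result = daily_means(obs)
```

In this toy input the second day is dropped because its 06 UTC report is missing.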

At least 20 days of complete data were required for a monthly average temperature to be computed, and the 1973-2011 period of record had to be at least 80% complete for a station to be included in the analysis.
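The two completeness thresholds can be sketched as follows. The function names are hypothetical, and measuring the 80% requirement as a fraction of months (rather than days) is an assumption, since the text does not say which unit was used.

```python
MIN_DAYS_PER_MONTH = 20      # from the text
MIN_RECORD_FRACTION = 0.80   # from the text
N_MONTHS = 39 * 12           # 1973 through 2011 inclusive


def monthly_mean(daily_temps):
    """Mean of the valid daily values, or None if fewer than 20 are present."""
    valid = [t for t in daily_temps if t is not None]
    if len(valid) < MIN_DAYS_PER_MONTH:
        return None
    return sum(valid) / len(valid)


def station_is_usable(monthly_means):
    """monthly_means: list of N_MONTHS values, None for missing months.

    Assumes completeness is counted in months (not stated in the text).
    """
    present = sum(1 for m in monthly_means if m is not None)
    return present / N_MONTHS >= MIN_RECORD_FRACTION
```

Under these assumptions a station needs at least 375 of the 468 possible monthly values to be kept.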

I then stratified the stations based upon the 2000 census population density at each station; the population dataset I used has a spatial resolution of 1 km.

I then accepted all 5×5 deg lat/lon grid boxes (the same ones that Phil Jones uses in constructing the CRUTem3 dataset) which had all of the following present: a CRUTem3 temperature, and at least 1 station from each of 3 population classes, with class boundaries at 0, 15, 500, and 30,000 persons per sq. km.
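A rough sketch of the stratification and grid-box test described above. The class boundaries are the ones given in the text; the function names, the convention that a density exactly on a boundary goes into the higher class, and the labeling of each box by its southwest corner are all assumptions made for illustration.

```python
import math
from bisect import bisect_right
from collections import defaultdict

CLASS_EDGES = (0, 15, 500, 30000)  # persons per sq. km, from the text


def population_class(density):
    """Return class 0, 1, or 2, or None if density is outside the edges."""
    if density < CLASS_EDGES[0] or density > CLASS_EDGES[-1]:
        return None
    # Boundary values fall into the higher class (an assumption),
    # except the top edge, which stays in the last class.
    return min(bisect_right(CLASS_EDGES, density) - 1, len(CLASS_EDGES) - 2)


def grid_box(lat, lon, size=5):
    """Southwest corner of the size x size degree box containing the point."""
    return (math.floor(lat / size) * size, math.floor(lon / size) * size)


def accepted_boxes(stations, boxes_with_crutem3):
    """Boxes that have a CRUTem3 value and stations from all three classes.

    stations: iterable of (lat, lon, density) tuples (hypothetical layout).
    boxes_with_crutem3: set of box corners that have a CRUTem3 temperature.
    """
    classes_seen = defaultdict(set)
    for lat, lon, density in stations:
        cls = population_class(density)
        if cls is not None:
            classes_seen[grid_box(lat, lon)].add(cls)
    return {
        box for box, seen in classes_seen.items()
        if len(seen) == len(CLASS_EDGES) - 1 and box in boxes_with_crutem3
    }


# Synthetic stations, not real ISH sites.
stations = [
    (42.3, -93.1, 3.0), (41.0, -94.5, 120.0), (44.9, -91.2, 2500.0),
    (52.0, 10.0, 5.0),  # this box has only one population class
]
accepted = accepted_boxes(stations, {(40, -95), (50, 10)})
```

Only the first box survives here, because the second one has no stations in the two higher population classes.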

By requiring all three population classes to be present for grids to be used in the analysis, we get the best ‘apples-to-apples’ comparison between stations of different population densities. The downside is that there is less geographic coverage than that provided in the Jones dataset, since relatively few grids meet such a requirement.

But the intent here is not to get a best estimate of temperature trends for the 1973-2011 period; it is instead to get an estimate of the level of spurious warming in the CRUTem3 dataset. The resulting number of 5×5 deg grids with stations from all three population classes averaged around 100 per month during 1973 through 2011.

RESULTS

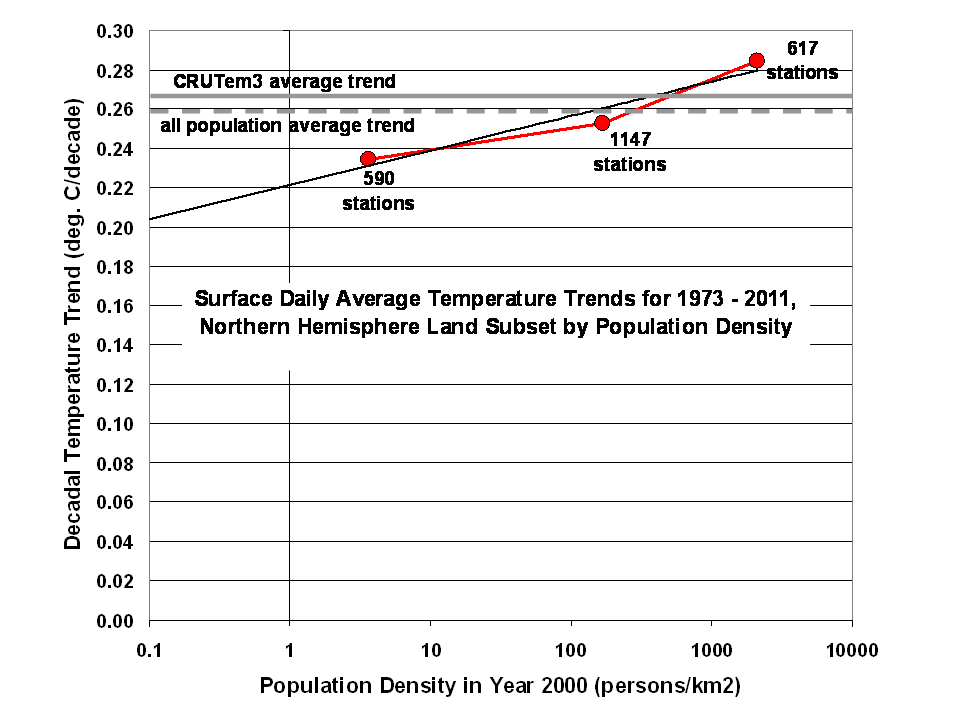

The results are shown in the following figure, which indicates that the lower the population density surrounding a temperature station, the lower the average linear warming trend for the 1973-2011 period. Note that the CRUTem3 trend is a little higher than simply averaging all of the accepted ISH stations together, but not as high as when only the highest population stations were used.

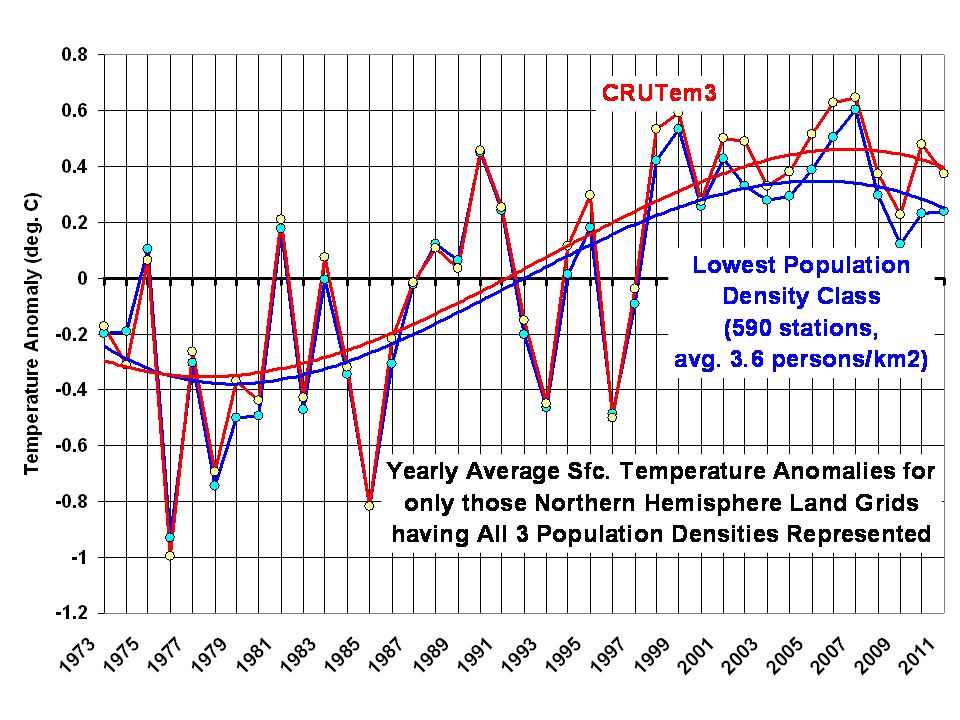

The CRUTem3 and lowest population density temperature anomaly time series which go into computing these trends are shown in the next plot, along with polynomial fits to the data:

Again, the above plot is not meant to necessarily be estimates for the entire Northern Hemispheric land area, but only those 5×5 deg grids where there are temperature reporting stations representing all three population classes.

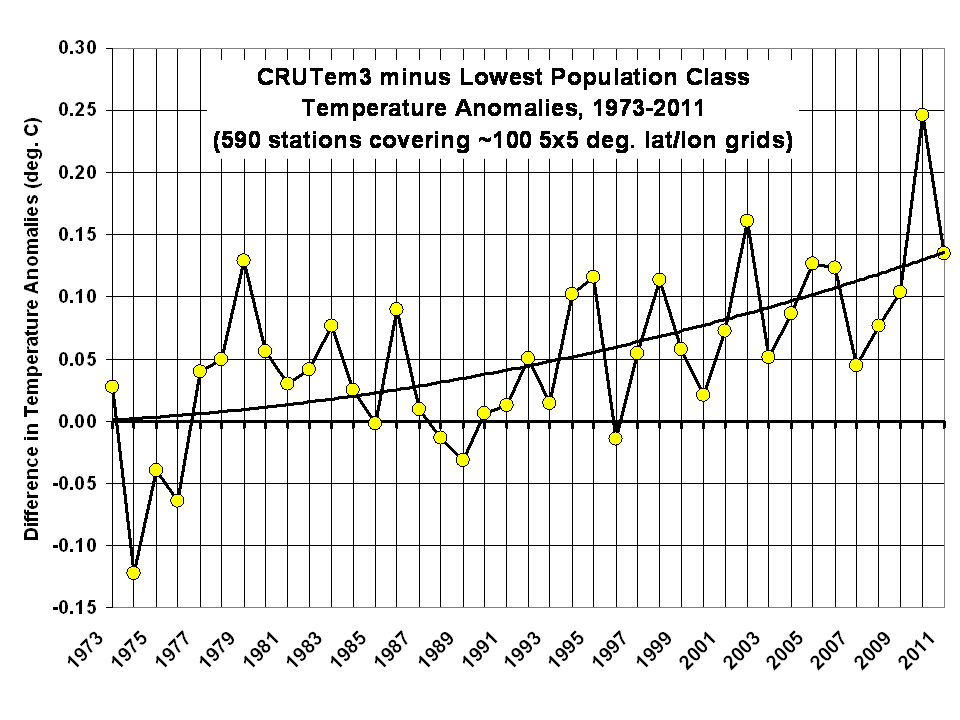

The difference between these two temperature traces is shown next:

From this last plot, we see in recent years there appears to be a growing bias in the CRUTem3 temperatures versus the temperatures from the lowest population class.

The CRUTem3 temperature linear trend is about 15% warmer than the lowest population class temperature trend. But if we extrapolate the results in the first plot above to near-zero population density (0.1 persons per sq. km), we get a 30% overestimate of temperature trends from CRUTem3.
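The arithmetic behind these percentages can be sketched as below. The text does not say how the extrapolation to 0.1 persons per sq. km was done, so the straight-line fit of trend against log10 of population density is purely an assumption, as are the function names. The input numbers are synthetic placeholders, not values from the plots.

```python
import math


def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept for y = slope*x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def extrapolated_trend(densities, trends, target_density=0.1):
    """Trend at target_density from a line fit in log10(density) space.

    The log-linear form is an assumption, not something the text confirms.
    """
    xs = [math.log10(d) for d in densities]
    slope, intercept = fit_line(xs, trends)
    return intercept + slope * math.log10(target_density)


def overestimate_percent(reference_trend, baseline_trend):
    """How much warmer reference_trend is than baseline_trend, in percent."""
    return 100.0 * (reference_trend / baseline_trend - 1.0)


# Synthetic placeholder values chosen only to make the arithmetic easy to follow.
densities = [1.0, 100.0, 10000.0]    # persons per sq. km
class_trends = [0.20, 0.24, 0.28]    # deg C per decade
baseline = extrapolated_trend(densities, class_trends)
```

With a reference trend and the extrapolated baseline in hand, `overestimate_percent(reference, baseline)` gives the kind of percentage quoted above.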

If I increase the number of population classes from 3 to 5, the CRUTem3 trend is overestimated by 60% at 0.1 persons per sq. km, but the number of grids which have stations representing all 5 population classes averages only 10 to 15 per month, instead of 100 per month. So, I suspect those results are less reliable.

I find the above results to be quite compelling evidence for what Anthony Watts, Pat Michaels, Ross McKitrick, et al., have been emphasizing for years: that poor thermometer siting has likely led to spurious warming trends, which have then inflated the official IPCC estimates of warming. These results are roughly consistent with the McKitrick and Michaels (2007) study, which suggested that as much as 50% of the reported surface warming since 1980 could be spurious.

I would love to write this work up and submit it for publication, but I am growing weary of the IPCC gatekeepers killing my papers; the more damaging any conclusions are to the IPCC narrative, the less likely they are to be published. That’s the world we live in.

UPDATE:

I’ve computed results for just the United States, and these are a little more specific. The ISH stations were once again stratified by local population density. Temperature trends were computed for each station individually, and the upper and lower 5% trend ‘outliers’ in each of the 3 population classes were excluded from the analysis. For each population class, I also computed the ‘official’ CRUTem3 trends, and averaged those just like I averaged the ISH station data.
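A minimal sketch of the per-station trend and outlier trimming described above. The function names are hypothetical, and dropping the same whole number of stations from each tail (rounding the 5% down) is an assumption about how the trimming was done.

```python
def linear_trend(values):
    """Least-squares slope per time step for evenly spaced values.

    None entries are treated as missing and skipped.
    """
    points = [(i, v) for i, v in enumerate(values) if v is not None]
    n = len(points)
    mean_t = sum(i for i, _ in points) / n
    mean_v = sum(v for _, v in points) / n
    stt = sum((i - mean_t) ** 2 for i, _ in points)
    stv = sum((i - mean_t) * (v - mean_v) for i, v in points)
    return stv / stt


def trimmed_mean(trends, percent=5):
    """Mean after dropping the lowest and highest `percent` of the trends.

    The count removed from each tail is rounded down (an assumption).
    """
    ordered = sorted(trends)
    k = len(ordered) * percent // 100
    kept = ordered[k:len(ordered) - k]
    return sum(kept) / len(kept)


# Synthetic station trends: 18 ordinary values plus one outlier in each tail.
station_trends = [-100.0] + [1.0] * 18 + [100.0]
class_average = trimmed_mean(station_trends)
```

With 20 synthetic stations, one value is removed from each tail, so the two extreme entries no longer affect the class average.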

The results in the following plot show that for the 87 stations in the lowest population class, the average CRUTem3 temperature trend was 57% warmer than the trend computed from the ISH station data.

These are apples-to-apples comparisons: for each station trend included in the averaging for each population class, a corresponding, nearest-neighbor CRUTem3 trend was also included in the averaging for that population class.
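One way a nearest-neighbor match like this could be done is sketched below. Using great-circle distance to grid-box centers is an assumption, as are the function names and the dictionary layout; the grid trends shown are synthetic.

```python
import math


def great_circle_km(lat1, lon1, lat2, lon2, radius_km=6371.0):
    """Haversine great-circle distance between two points, in kilometers."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * radius_km * math.asin(math.sqrt(a))


def nearest_grid_trend(lat, lon, grid_trends):
    """Trend of the grid box whose center is closest to the station.

    grid_trends: {(center_lat, center_lon): trend} (hypothetical layout).
    """
    center = min(
        grid_trends,
        key=lambda c: great_circle_km(lat, lon, c[0], c[1]),
    )
    return grid_trends[center]


# Synthetic grid-box trends, not CRUTem3 values.
grid = {(42.5, -92.5): 0.25, (47.5, -92.5): 0.30}
matched = nearest_grid_trend(43.0, -93.0, grid)
```

Each station trend would then be paired with its matched grid trend before the two sets are averaged within a population class.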

How can one explain such results, other than to conclude that there is spurious warming in the CRUTem3 dataset? I already see in the comments below that there are a few attempts to divert attention from this central issue. I would like to hear an alternative explanation for such results.

added 15:07 CDT: BTW, the lowest population class results come from approx. 4 million temperature measurements.
