(last edited 8:30 a.m. 1 June 2009 for clarity)

I occasionally hear the complaint that some of what I write is too technical to understand, which I’m sure is true. The climate system is complex, and discussing the scientific issues associated with global warming (aka “climate change”) can get pretty technical pretty fast.

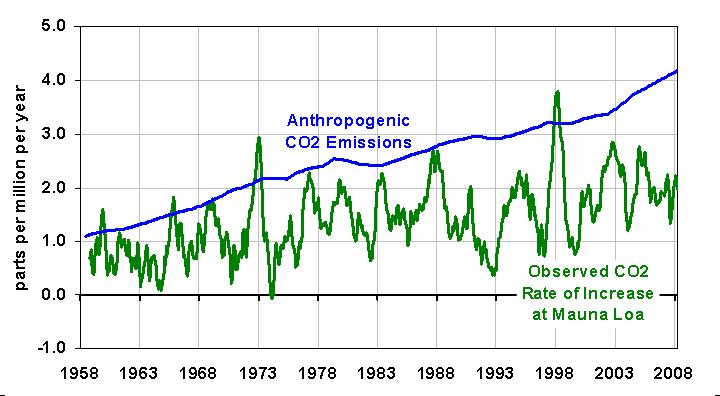

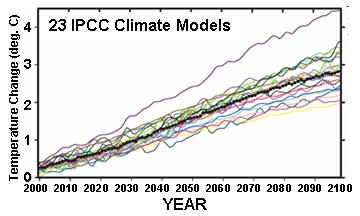

Fortunately, the most serious problem the climate models have (in my view) is one which is easily understood by the public. So, I’m going to make yet another attempt at explaining why the computerized climate models tracked by the U.N.’s Intergovernmental Panel on Climate Change (IPCC) – all 23 of them – predict too much warming for our future. The basic problem I am talking about has been peer reviewed and published by us, and so cannot be dismissed lightly.

But this time I will use no graphs (!), and I will use only a single number (!!) which I promise will be a small one. 😉

I will do this in three steps. First, I will use the example of a pot of water on the stove to demonstrate why the temperature of things (like the Earth) rises or falls.

Second, I will describe why so many climate model “experts” believe that adding CO2 to the atmosphere will cause the climate system to warm by a large, possibly catastrophic amount.

Finally, I will show how Mother Nature has fooled those climate experts into programming climate models to behave incorrectly.

Some of this material can be found scattered through other web pages of mine, but here I have tried to create a logical progression of the most important concepts, and minimized the technical details. It might be edited over time as questions arise and I find better ways of phrasing things.

The Earth’s Climate System Compared to a Pot of Water on the Stove

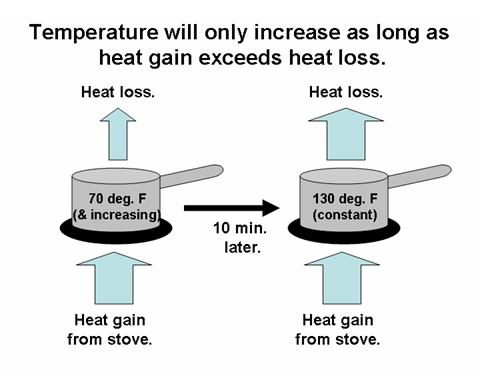

Before we discuss what can alter the global-average temperature, let’s start with the simple example of a pot of water placed on a stove. Imagine it’s a gas stove, and the flame is set on its lowest setting, so the water will become warm but will not boil. To begin with, the pot does not have a lid.

Obviously, the heat from the flame will warm the water and the pot, but after about 10 minutes the temperature will stop rising. The pot stops warming when it reaches a point of equilibrium where the rate of heat loss by the pot to its cooler surroundings equals the rate of heat gained from the stove. The pot warmed as long as an imbalance in those two flows of energy existed, but once the magnitude of heat loss from the hot pot reached the same magnitude as the heat gain from the stove, the temperature stopped changing.
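For readers who like to see the arithmetic, here is a minimal Python sketch of the pot's energy budget, stepped forward one second at a time. The numbers are hypothetical illustrations, not measurements: about 4000 J per deg. C for roughly a liter of water, a 600 W flame, and 20 W of heat loss for every deg. C the pot is warmer than a 20 C room. The pot warms quickly at first, then levels off near 50 C, where the 600 W lost to the room balances the 600 W from the flame.

```python
def heat_pot(flame_power, loss_coeff, room_temp=20.0, heat_capacity=4000.0,
             minutes=60, dt_seconds=1.0):
    """Step a simple pot-of-water energy balance forward in time.

    heat_capacity * dT/dt = flame_power - loss_coeff * (T - room_temp)
    Returns the pot temperature (deg C) at the end of each minute.
    """
    temp = room_temp
    history = []
    steps_per_minute = int(60 / dt_seconds)
    for _minute in range(minutes):
        for _step in range(steps_per_minute):
            heat_gain = flame_power                      # W delivered by the flame
            heat_loss = loss_coeff * (temp - room_temp)  # W lost to the cooler room
            temp += (heat_gain - heat_loss) * dt_seconds / heat_capacity
        history.append(temp)
    return history


# Hypothetical values chosen only for illustration.
temps = heat_pot(flame_power=600.0, loss_coeff=20.0)
for minute in (1, 5, 10, 20, 30):
    print(f"after {minute:2d} min: {temps[minute - 1]:.1f} C")
```

The temperature only changes while heat gain and heat loss differ; once they match, the pot stops warming.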

Now let’s imagine we turn the flame up slightly. This will result in a temporary imbalance once again between the rate of energy gain and energy loss, which will then cause the pot to warm still further. As the pot warms, it loses energy even more rapidly to its surroundings. Finally, a new, higher temperature is reached where the rate of energy loss and energy gain are once again in balance.

But there’s another way to cause the pot to warm other than to add more heat: We can reduce its ability to cool. If next we place a lid on the pot, the pot will warm still more because the rate of heat loss is then reduced below the rate of heat gain from the stove. In this case, loosely speaking, the increased temperature of the pot is not because more heat is added, but because less heat is being allowed to escape.
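The two ways of warming the pot can be compared directly from the balance condition alone: at equilibrium, heat in equals heat out, so the final temperature is room temperature plus flame power divided by the heat-loss rate per degree. A short sketch with the same hypothetical numbers: turning up the flame raises the numerator, while putting on the lid (modeled here, as an assumption, by a smaller loss rate) shrinks the denominator.

```python
def equilibrium_temp(flame_power, loss_coeff, room_temp=20.0):
    """Temperature (deg C) at which heat lost to the room balances the flame."""
    # Balance: flame_power = loss_coeff * (T - room_temp); solve for T.
    return room_temp + flame_power / loss_coeff


base = equilibrium_temp(600.0, 20.0)        # low flame, no lid
more_flame = equilibrium_temp(700.0, 20.0)  # flame turned up: more heat in
with_lid = equilibrium_temp(700.0, 15.0)    # lid on (hypothetical): less heat allowed out
print(base, more_flame, with_lid)
```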

Global Warming

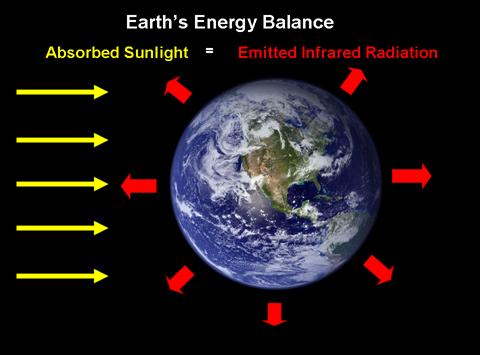

The example of what causes a pot of water on a stove to warm is the same fundamental situation that exists with climate change in general, and global warming theory in particular. A change in the energy flows in or out of the climate system will, in general, cause a temperature change. The average temperature of the climate system (atmosphere, ocean, and land) will remain about the same only as long as the rate of energy gain from sunlight equals the rate of heat loss by infrared radiation to outer space. This is illustrated in the following cartoon:

Again, the average temperature of the Earth (like a pot of water on the stove) will only change when there is an imbalance between the rates of energy gained and energy lost.

What this means is that anything that can change the rates of energy flow illustrated above — in or out of the climate system — can cause global warming or global cooling.

In the case of manmade global warming, the extra carbon dioxide in the atmosphere is believed to be reducing the rate at which the Earth cools to outer space. This already occurs naturally through the so-called “greenhouse effect” of the atmosphere, a process in which water vapor, clouds, carbon dioxide and methane act as a ‘radiative blanket’, insulating the lower atmosphere and the surface and raising the Earth’s average surface temperature by about 33 deg. C (close to 60 deg. F).
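The 33 deg. C figure can be checked with a textbook back-of-the-envelope calculation: find the temperature at which a planet with no greenhouse effect would give off as much infrared energy as the sunlight it absorbs, then compare it with the observed surface temperature. This sketch assumes standard approximate values (a solar constant of 1361 W per sq. meter, 30% of sunlight reflected, and a global-average surface temperature of 288 K) and keeps the same reflectivity as today, which is a simplification.

```python
SIGMA = 5.670374419e-8      # Stefan-Boltzmann constant, W m^-2 K^-4
SOLAR_CONSTANT = 1361.0     # W m^-2 at the top of the atmosphere (approximate)
ALBEDO = 0.30               # fraction of sunlight reflected to space (approximate)
OBSERVED_SURFACE_K = 288.0  # approximate global-average surface temperature


def effective_temperature(solar=SOLAR_CONSTANT, albedo=ALBEDO):
    """Temperature (K) at which a planet with no greenhouse effect radiates
    away exactly as much infrared energy as the sunlight it absorbs."""
    absorbed = solar * (1.0 - albedo) / 4.0  # sunlight spread over the whole sphere
    return (absorbed / SIGMA) ** 0.25


t_eff = effective_temperature()
greenhouse_warming = OBSERVED_SURFACE_K - t_eff
print(f"no-greenhouse temperature: {t_eff:.1f} K, greenhouse warming: {greenhouse_warming:.1f} C")
```

The no-greenhouse temperature comes out near 255 K, roughly 33 deg. C colder than the observed surface.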

The Earth’s natural greenhouse effect is like the lid on our pot of water on the stove. The lid reduces the pot’s ability to cool and so makes the pot of water, on average, warmer than it would be without the lid. (I don’t think you will find the greenhouse effect described elsewhere in terms of an insulator — like a blanket — but I believe that is the most accurate analogy.) Similarly, the Earth’s natural greenhouse effect keeps the lower atmosphere and surface warmer than if there were no greenhouse effect. So, more CO2 in the atmosphere slightly enhances that effect.

And also like the pot of water, the other basic way to cause warming is to increase the rate of energy input — in the case of the Earth, sunlight. Note that this does not necessarily require an increase in the output of the sun. A change in any of the myriad processes that control the Earth’s average cloud cover can also do this. For instance, the IPCC talks about manmade particulate pollution (“aerosols”) causing a change in global cloudiness…but they never mention the possibility that the climate system can change its own cloud cover!

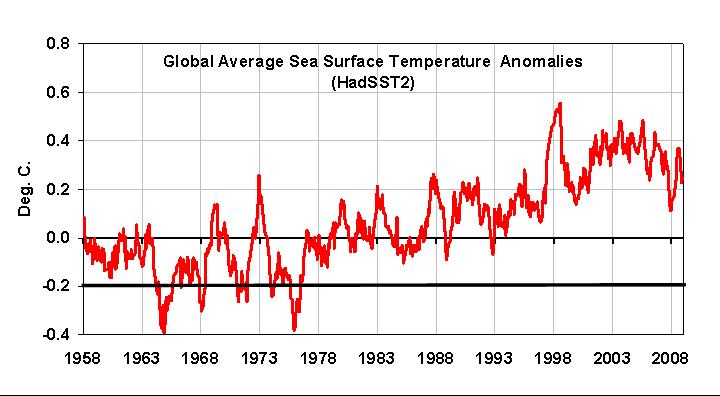

If the amount of cloud cover reflecting sunlight back to space decreases from, say, a change in oceanic and atmospheric circulation patterns, then more sunlight will be absorbed by the ocean. As a result, there will then be an imbalance between the infrared energy lost and solar energy gained by the Earth. The ocean will warm as a result of this imbalance, causing warmer and more humid air masses to form and flow over the continents, which would then cause the land to warm, too.
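To put a rough size on such an imbalance: averaged over the globe, the top of the atmosphere receives about one quarter of the solar constant, so a drop in the fraction of sunlight reflected (the albedo) lets in that much more energy per sq. meter. The sketch below assumes a solar constant of 1361 W per sq. meter and a purely hypothetical albedo decrease of 0.01; it does not claim any particular cloud change has happened.

```python
SOLAR_CONSTANT = 1361.0  # W m^-2, approximate


def extra_absorbed_sunlight(albedo_change, solar=SOLAR_CONSTANT):
    """Change in globally averaged absorbed sunlight (W m^-2) for a change in
    planetary albedo. A negative albedo_change (less reflection, for example
    from fewer clouds) gives a positive result: more energy into the system."""
    return -solar / 4.0 * albedo_change


imbalance = extra_absorbed_sunlight(-0.01)  # hypothetical 0.01 drop in albedo
print(f"extra absorbed sunlight: {imbalance:.2f} W/m^2")
```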

The $64 Trillion Question: By How Much Will the Earth Warm from More CO2?

Now for a magic number that we will be referring to later, which is how much more energy is lost to outer space as the Earth warms. It can be calculated theoretically that for every 1 deg. C the Earth warms, it gives off an average of about 3.3 Watts per square meter more infrared energy to space. Just as you feel more infrared (heat) radiation coming from a hot stove than from a warm stove, the Earth gives off more infrared energy to space the warmer it gets.
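The magic number converts directly into a no-feedback warming estimate: an energy imbalance is cancelled once the Earth has warmed enough for the extra infrared loss to equal it, so the warming is the imbalance divided by 3.3. A minimal sketch (the function name and the 1 W per sq. meter example are hypothetical):

```python
PLANCK_RESPONSE = 3.3  # W m^-2 of extra infrared loss per deg C of warming (from the text)


def no_feedback_warming(imbalance_w_m2, planck=PLANCK_RESPONSE):
    """Warming (deg C) needed for the extra infrared loss to cancel an energy
    imbalance, if ONLY temperature changes and nothing else responds."""
    return imbalance_w_m2 / planck


print(no_feedback_warming(3.3))  # 1.0
print(no_feedback_warming(1.0))  # hypothetical 1 W/m^2 imbalance
```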

This is part of the climate system’s natural cooling mechanism, and all climate scientists agree with this basic fact. What we don’t agree on is how the climate system responds to warming by either enhancing, or reducing, this natural cooling mechanism. The magic number — 3.3 Watts per sq. meter — represents how much extra energy the Earth loses if ONLY the temperature is increased, by 1 deg. C, and nothing else is changed. In the real world, however, we can expect that the rest of the climate system will NOT remain the same in response to a warming tendency.

Thus, the most important debate in global warming research today is the same as it was 20 years ago: How will clouds (and, to a lesser extent, other elements of the climate system) respond to warming, thereby enhancing or reducing the warming? These indirect changes that further influence temperature are called feedbacks, and they determine whether manmade global warming will be catastrophic or just lost in the noise of natural climate variability.

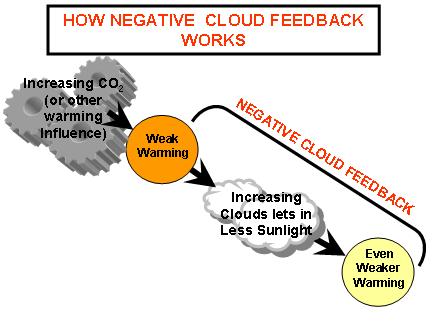

Returning to our example of the whole Earth warming by 1 deg. C, if that warming causes an increase in cloud cover, then the 3.3 Watts per sq. meter of extra infrared loss to outer space is augmented by a reduction in solar heating of the Earth, since the extra clouds reflect more sunlight back to space. The result is a smaller temperature rise. This is called negative feedback, and it can be illustrated conceptually like this:
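In numbers, a feedback just changes how much net cooling each degree of warming buys. In the sketch below, each feedback is written as extra energy gained per deg. C (positive values are positive feedbacks, negative values are negative feedbacks), and it is subtracted from the 3.3 Watts per sq. meter of extra infrared loss. The 3.3 W per sq. meter imbalance and the -1.7 cloud feedback are hypothetical values chosen only to make the effect easy to see.

```python
PLANCK_RESPONSE = 3.3  # W m^-2 per deg C, from the text


def equilibrium_warming(imbalance_w_m2, feedbacks_w_m2_per_c):
    """Equilibrium warming (deg C) for an energy imbalance, given feedbacks.

    Each feedback is extra energy GAINED (W m^-2) per deg C of warming:
    positive = positive feedback (e.g. fewer clouds letting in more sunlight),
    negative = negative feedback (e.g. more clouds reflecting sunlight away).
    """
    net_cooling_per_c = PLANCK_RESPONSE - sum(feedbacks_w_m2_per_c)
    if net_cooling_per_c <= 0:
        raise ValueError("no stable equilibrium: feedbacks overwhelm the infrared loss")
    return imbalance_w_m2 / net_cooling_per_c


forcing = 3.3  # hypothetical imbalance giving 1 deg C with no feedback
print(equilibrium_warming(forcing, []))      # no feedback
print(equilibrium_warming(forcing, [-1.7]))  # hypothetical negative cloud feedback
```

With the hypothetical negative feedback, the same imbalance is cancelled after only about two-thirds of a degree of warming.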

If negative feedback exists in the real climate system, then manmade global warming will become, for most practical purposes, a non-issue.

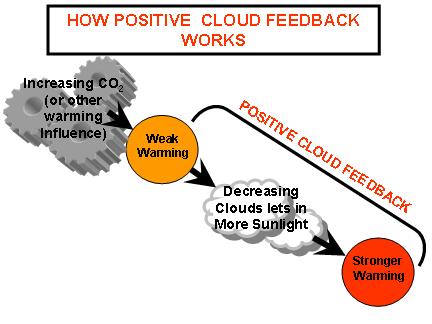

But this is not how the IPCC thinks nature works. They believe that cloud cover of the Earth decreases with warming, which would let in more sunlight and cause the Earth to warm to an even higher temperature. (The same is true if the water vapor content of the atmosphere increases with warming, since water vapor is our main greenhouse gas.) This is called positive feedback, and all 23 climate models tracked by the IPCC now exhibit positive cloud and water vapor feedback. The following illustration shows conceptually how positive feedback works:

In fact, the main difference between models that predict only moderate warming versus those that predict strong warming has been traced to the strength of their positive cloud feedbacks.
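One way to see why cloud feedback strength matters so much: because positive feedback is subtracted from the 3.3 Watts per sq. meter of extra cooling, equal steps in feedback strength produce ever larger steps in warming. The sketch below uses evenly spaced, hypothetical feedback values; they are not taken from any of the IPCC models.

```python
PLANCK_RESPONSE = 3.3  # W m^-2 per deg C, from the text


def warming_multiplier(net_feedback):
    """How many times larger equilibrium warming is than the no-feedback case.

    net_feedback: total feedback in W m^-2 of extra energy gained per deg C
    (positive = positive feedback). Must be smaller than PLANCK_RESPONSE.
    """
    return PLANCK_RESPONSE / (PLANCK_RESPONSE - net_feedback)


# Hypothetical, evenly spaced feedback strengths (NOT values from any model).
for feedback in (0.0, 0.5, 1.0, 1.5, 2.0, 2.5):
    print(f"feedback {feedback:.1f} W/m^2/C -> warming x{warming_multiplier(feedback):.2f}")
```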

How Mother Nature Fooled the World’s Top Climate Scientists

Obviously, the question of how clouds in the REAL climate system respond to a warming tendency is of paramount importance, because that guides the development and testing of the climate models. Ultimately, the models must be based upon the observed behavior of the atmosphere.

So, what IS observed when the Earth warms? Do clouds increase or decrease? While the results vary with which years are analyzed, it has often been found that warmer years have less cloud cover, not more.

And this has led to the ‘scientific consensus’ that cloud feedbacks in the real climate system are probably positive, although by an uncertain amount. And if cloud feedbacks end up being too strongly positive, then we are in big trouble from manmade global warming.

But at this point an important question needs to be asked that no one asks: When the climate system experiences a warm year, what caused the warming? By definition, cloud feedback cannot occur unless the temperature changes…but what if that temperature change was caused by clouds in the first place?

This is important because if decreasing cloud cover caused warming, and this has been mistakenly interpreted as warming causing a decrease in cloud cover, then positive feedback will have been inferred even if the true feedback in the climate system is negative.
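The mix-up can be demonstrated with a toy simulation. This is a hypothetical sketch, not the model used in the published work: temperature is pushed around both by random cloud changes (clouds causing temperature change) and by random non-radiative heating, while a true feedback of 6 W per sq. meter per deg. C (well above 3.3, so net negative feedback) acts on it. Regressing the radiation a satellite would see against temperature recovers the true value only when there is no cloud forcing. With cloud forcing, the diagnosed value falls well below 3.3, which would be read as positive feedback. All parameter values are assumptions chosen for illustration.

```python
import random


def simulate(true_feedback, cloud_noise, other_noise, steps=20000,
             heat_capacity=20.0, seed=1):
    """Toy energy balance with cause and effect running in both directions.

    true_feedback: extra energy lost to space per deg C (W m^-2 per C).
    cloud_noise: std. dev. of random cloud changes that FORCE temperature.
    other_noise: std. dev. of non-radiative heating (e.g. ocean mixing).
    Returns temperature anomalies and the radiative flux anomalies a
    satellite would measure (positive = more energy lost to space).
    """
    rng = random.Random(seed)
    temp = 0.0
    temps, fluxes = [], []
    for _ in range(steps):
        cloud_forcing = rng.gauss(0.0, cloud_noise)  # clouds causing temperature change
        other_heating = rng.gauss(0.0, other_noise)
        feedback_loss = true_feedback * temp         # temperature causing radiation change
        temp += (cloud_forcing + other_heating - feedback_loss) / heat_capacity
        # The satellite sees the feedback response MINUS the cloud forcing.
        fluxes.append(true_feedback * temp - cloud_forcing)
        temps.append(temp)
    return temps, fluxes


def regression_slope(x, y):
    """Ordinary least-squares slope of y on x."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var = sum((a - mean_x) ** 2 for a in x)
    return cov / var


TRUE_FEEDBACK = 6.0  # hypothetical: net negative feedback
for cloud_noise in (0.0, 1.0):
    t, r = simulate(TRUE_FEEDBACK, cloud_noise, other_noise=1.0)
    print(f"cloud noise {cloud_noise}: diagnosed feedback {regression_slope(t, r):.2f} W/m^2/C")
```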

As far as I know, this potential mix-up between cause and effect — and the resulting positive bias in diagnosed feedbacks — had never been studied until we demonstrated it in a peer-reviewed paper in the Journal of Climate. Unfortunately, because climate research covers such a wide range of specialties, most climate experts are probably not even aware that our paper exists.

So how do we get around this cause-versus-effect problem when observing natural climate variations in our attempt to identify feedback? Our very latest research, now in peer review for possible publication in the Journal of Geophysical Research, shows that one can separate, at least partially, the effects of clouds-causing-temperature-change (which “looks like” positive feedback) from temperature-causing-clouds-to-change (true feedback).

We analyzed 7.5 years of our latest and best NASA satellite data and discovered that, when the effect of clouds-causing-temperature-change is accounted for, cloud feedbacks in the real climate system are strongly negative. The negative feedback was so strong that it more than cancelled out the positive water vapor feedback we also found. It was also consistent with evidence of negative feedback we found in the tropics and published in 2007.

In fact, the resulting net negative feedback was so strong that, if it exists on the long time scales associated with global warming, it would result in only 0.6 deg. C of warming by late in this century.

Natural Cloud Variations: The Missing Piece of the Puzzle?

In this critical issue of cloud feedbacks, which even the IPCC has admitted is their largest source of uncertainty, it is clear that the effect of natural cloud variations on temperature has been ignored. In the simplest of terms, cause and effect have been mixed up. (Even the modelers will have to concede that clouds-causing-temperature-change exists, because we found clear evidence of it in every one of the IPCC climate models we studied.)

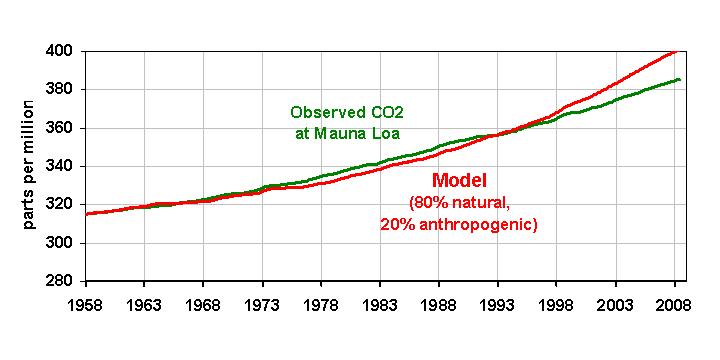

But this brings up another important question: What if global warming itself has been caused by a small, long-term, natural change in global cloud cover? Our observations of global cloud cover have not been long enough or accurate enough to document whether any such cloud changes have happened or not. Some indirect evidence that this has indeed happened is discussed here.

Even though they never say so, the IPCC has simply assumed that the average cloud cover of the Earth does not change, century after century. This is a totally arbitrary assumption, and given the chaotic variations that the ocean and atmosphere circulations are capable of, it is probably wrong. Little more than a 1% change in cloud cover up or down, and sustained over many decades, could cause events such as the Medieval Warm Period or the Little Ice Age.

As far as I know, the IPCC has never discussed their assumption that global average cloud cover always stays the same. The climate change issue is so complex that most experts have probably not even thought about it. But we meteorologists by training have a gut feeling that things like this do indeed happen. In my experience, a majority of meteorologists do not believe that mankind is mostly to blame for global warming. Meteorologists appreciate how complex cloud behavior is, and most tend to believe that climate change is largely natural.

Our research has taken this gut feeling and demonstrated with both satellite data and a simple climate model, in the language that climate modelers speak, how potentially serious this issue is for global warming theory.

And this cause-versus-effect issue is not limited to just clouds. For instance, there are processes, mainly complex precipitation processes, that can cause the water vapor content of the atmosphere to change, which will then change global temperatures. Precipitation is what limits how much of our main greenhouse gas, water vapor, is allowed to accumulate in the atmosphere, thus preventing a runaway greenhouse effect. For example, a small change in wind shear associated with a change in atmospheric circulation patterns could slightly change the efficiency with which precipitation systems remove water vapor, leading to global warming or global cooling. This has long been known, but again, climate change research covers such a wide range of disciplines that very few of the experts have recognized the importance of obscure published studies like this one.

While there are a number of other potentially serious problems with climate model predictions, the mix-up between cause and effect when studying cloud behavior, by itself, has the potential to mostly deflate all predictions of substantial global warming. It is only a matter of time before others in the climate research community realize this, too.
