The climate change deniers have no one but themselves to blame for last night’s vote.

I’m talking about those who deny NATURAL climate change. Like Al Gore, John Holdren, and everyone else who acts as if climate change only began after they were born.

Politicians formed the IPCC over 20 years ago with an endgame in mind: to regulate CO2 emissions. I know, because I witnessed some of the behind-the-scenes planning. It is not a scientific organization. It was organized to use the government-funded scientific research establishment to achieve policy goals.

Now, that’s not necessarily a bad thing. But when they are portrayed as representing unbiased science, that IS a bad thing. If anthropogenic global warming, and ocean ‘acidification’ (now there’s a biased and totally incorrect term), turn out to be largely a false alarm, those who have run the IPCC will be out of a job. More on that later.

I don’t want to be misunderstood on this. IF we are destroying the planet with our fossil fuel burning, then something SHOULD be done about it.

But the climate science community has allowed itself to be used on this issue, and as a result, politicians, activists, and the media have successfully portrayed the biased science as settled.

They apparently do not realize that ‘settled science’ is an oxymoron.

The most vocal climate scientists defending the IPCC have lost their objectivity. Yes, they have what I consider to be a plausible theory. But they actively suppress evidence to the contrary, for instance, attempts to study natural explanations for recent warming.

That’s one reason why the public was so outraged about the ClimateGate e-mails. ClimateGate doesn’t prove their science is wrong…but it does reveal their bias. Science progresses by investigating alternative explanations for things. Long ago, the IPCC all but abandoned that search.

Oh, they have noted (correctly I believe) that a change in the total output of the sun is not to blame. But there are SO many other possibilities, and all they do is dismiss those possibilities out of hand. They have a theory — more CO2 is to blame — and they religiously stick to it. It guides all of the research they do.

The climate models are indeed great accomplishments. It’s what they are being used for that is suspect. A total of 23 models cover a wide range of warming estimates for our future, and yet there is no way to test them for the very thing they are being used for: climate change predictions.

Virtually all of the models produce decadal time scale warming that exceeds what we have observed in the last 15 years. That fact has been known for years, but its publication in the peer reviewed literature continues to be blocked.

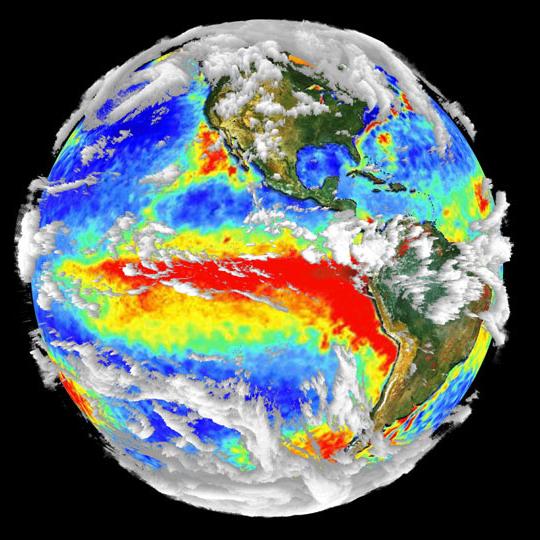

My theory is that a natural change in cloud cover has caused most of the recent warming. Temperature proxy data from around the world suggests that just about every century in the last 2,000 years has experienced warming or cooling. Why should today’s warmth be manmade, when the Medieval Warm Period was not? Just because we finally have one potential explanation – CO2?

This only shows how LITTLE we understand about climate change…not how MUCH we know.

Why would scientists allow themselves to be used in this way? When I have pressed them on the science over the years, they all retreat to the position that getting away from fossil fuels is the ‘right thing to do anyway’.

In other words, they have let their worldviews, their politics, their economic understanding (or lack thereof) affect their scientific judgment. I am ashamed for our scientific discipline and embarrassed by their behavior.

Is it any wonder that scientists have such a bad reputation among the taxpayers who pay them to play in their ivory tower sandboxes? They can make gloom and doom predictions all day long of events far in the future without ever having to suffer any consequences of being wrong.

The perpetual supply of climate change research money also biases them. Everyone in my business knows that as long as manmade climate change remains a serious threat, the money will continue to flow, and climate programs will continue to grow.

Now, I do agree the supply of fossil fuels is not endless. But we will never actually “run out”…we will just slowly stop trying to extract them as they become increasingly scarce (translation – more expensive). That’s the way the world works.

People who claim we are going to wake up one morning and our fossil fuels will be gone are either pandering, or stupid, or both.

But how you transition from fossil fuels to other sources of energy makes all the difference in the world. Making our most abundant and affordable sources of energy artificially more expensive with laws and regulations will end up killing millions of people.

And that’s why I speak out. Poverty kills. Those who argue otherwise from their positions of fossil-fueled health and wealth are like spoiled children.

The truly objective scientist should be asking whether MORE, not less, atmospheric carbon dioxide is what we should be trying to achieve. There is more published real-world evidence for the benefits of more carbon dioxide than for any damage caused by it. The benefits have been measured in the real world. The risks still remain theoretical.

Carbon dioxide is necessary for life on Earth. That it has been so successfully demonized with so little hard evidence is truly a testament to the scientific illiteracy of modern society. If humans were destroying CO2 — rather than creating more — imagine the outrage there would be at THAT!

I would love the opportunity to cross-examine these (natural) climate change deniers in a court of law. They have gotten away with too much, for too long. Might they be right? Sure. But the public has no idea how flimsy, and how circumstantial, their evidence is.

In the end, I doubt the IPCC will ever be defunded. Last night’s vote in the House is just a warning shot across the bow. But unless the IPCC starts to change its ways, it runs the risk of being totally marginalized. It has almost reached that point, anyway.

And maybe the IPCC leadership doesn’t really care if its pronouncements are ignored, as long as they can jet around the world to meet in exotic destinations and plan where their next meeting should be held. I hear it’s a pretty good gig.
