It is sometimes said that climate models are built upon physical first principles, as immutable as the force of gravity or conservation of energy (which are, indeed, included in the models). But this is a half-truth, at best, spoken by people who either don’t know any better or are outright lying.

The most physically sound portion of global warming predictions is that adding CO2 to the atmosphere causes about a 1% energy imbalance in the system (energy imbalances are what cause temperature to change), and if nothing else but the temperature changes, there would only be about a 1 deg. C warming response to a doubling of atmospheric CO2 (we aren’t even 50% of the way to doubling).
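For readers who want to check the arithmetic, here is a minimal sketch of where numbers of this size come from. It treats the Earth as a simple blackbody radiator. The 255 K emission temperature and the 3.7 W/m^2 forcing for doubled CO2 are commonly quoted values that I am assuming here; they do not come from any particular model, and the function names are purely illustrative.

```python
# Back-of-envelope no-feedback warming for doubled CO2.
# All constants below are assumed round numbers, not model output.
SIGMA = 5.670e-8        # Stefan-Boltzmann constant, W/m^2/K^4
EMISSION_TEMP = 255.0   # K, Earth's effective emission temperature (assumed)
FORCING_2XCO2 = 3.7     # W/m^2, commonly quoted forcing for doubled CO2 (assumed)


def emitted_flux(temp_k):
    """Infrared flux emitted by a blackbody at temp_k, in W/m^2."""
    return SIGMA * temp_k ** 4


def planck_response(temp_k):
    """Extra emission per kelvin of warming: d(sigma * T^4)/dT."""
    return 4.0 * SIGMA * temp_k ** 3


def imbalance_percent(forcing, temp_k):
    """Forcing as a percentage of the total emitted flux."""
    return 100.0 * forcing / emitted_flux(temp_k)


def no_feedback_warming(forcing, temp_k):
    """Warming needed to restore balance if only temperature changes."""
    return forcing / planck_response(temp_k)


if __name__ == "__main__":
    print(imbalance_percent(FORCING_2XCO2, EMISSION_TEMP))    # percent-level imbalance
    print(no_feedback_warming(FORCING_2XCO2, EMISSION_TEMP))  # close to 1 deg. C
```

Under these assumptions the imbalance is on the order of a percent or two, and the temperature response comes out close to 1 deg. C.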

But this is where the reasonably sound physical first principles end (if you can even call them that, since the 1 deg. estimate is itself a theoretical calculation).

Most of the model-predicted warming people are worried about is not due to this 1 deg., which would likely be benign or even beneficial. It’s the additional 2 deg. or more of warming many models produce, which originates from highly uncertain positive feedbacks: how clouds, water vapor, and other features of the climate system change with warming, which then further amplify the warming.
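The amplification described here is often written with the standard feedback-gain formula, total warming = no-feedback warming / (1 - f), where f is the net feedback fraction. The sketch below uses that textbook relation with the round numbers from the text (1 deg. amplified to 3 deg.). The function names are hypothetical, and this is only an illustration of the arithmetic, not how any climate model actually computes warming.

```python
def feedback_fraction(no_feedback_warming, total_warming):
    """Net feedback fraction f implied by a given amplification."""
    return 1.0 - no_feedback_warming / total_warming


def amplified_warming(no_feedback_warming, f):
    """Total warming for feedback fraction f (f < 1; negative f damps)."""
    if f >= 1.0:
        raise ValueError("f >= 1 would imply a runaway response")
    return no_feedback_warming / (1.0 - f)


if __name__ == "__main__":
    # 1 deg. of direct warming turned into 3 deg. implies f of about 2/3.
    f = feedback_fraction(1.0, 3.0)
    print(f)
    # The same 1 deg. with net negative feedback (f = -0.5) shrinks instead.
    print(amplified_warming(1.0, -0.5))
```

Note how sensitive the result is to f: moving from f = 0.5 to f = 0.67 takes the total from 2 deg. to 3 deg., which is why small changes in uncertain components matter so much.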

Cloud Feedback

These positive feedbacks, if they even exist, are in effect what scare the wits out of people. You could “dial in” positive feedback just by (for example) making the average amount of low cloud in a model decrease slightly as the average temperature warms.

It’s not this simple, of course, but my point is that ‘worrisome warming’ is caused in the models by highly parameterized (i.e. simplified) and uncertain components buried deep amongst the models’ real physical principles. The physics involved in cloud formation is, for the most part, not included in climate models. Even cloud resolving models, which do “grow” clouds, depend upon uncertain parameterizations (e.g. the autoconversion threshold).

So, what are the feedbacks in the real climate system? Unfortunately, they are notoriously hard to measure.

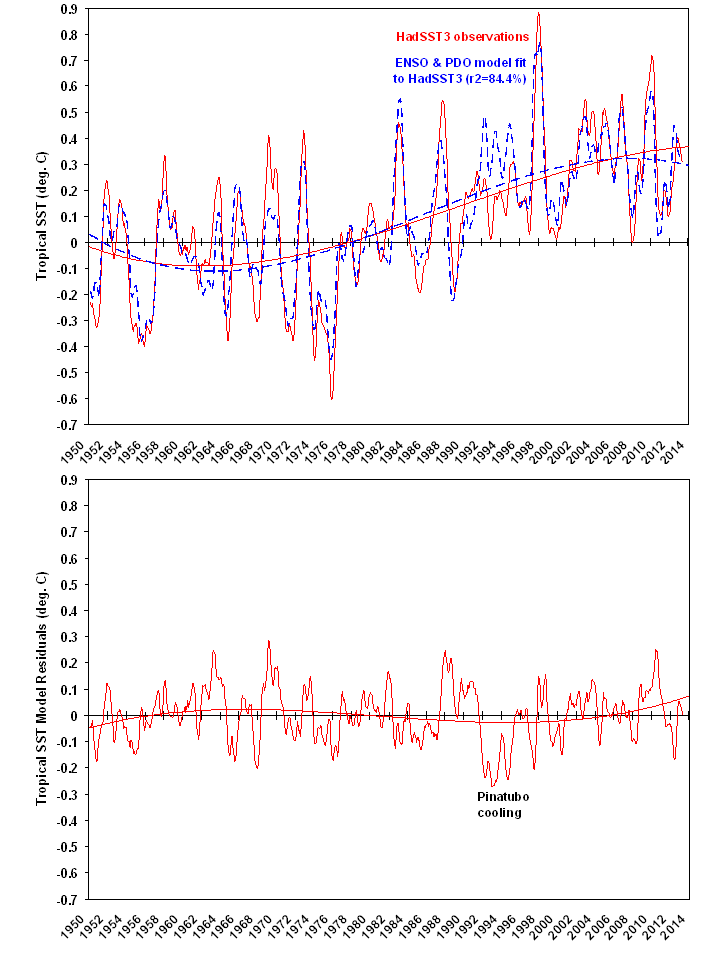

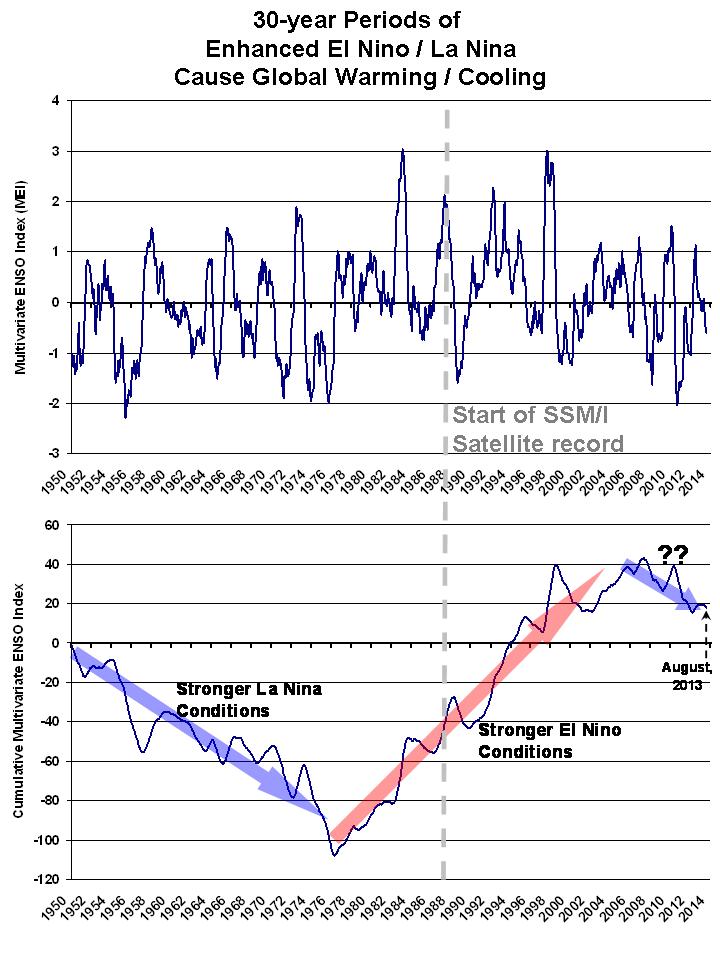

The main reason, I believe, is related to cause and effect. When we observe that natural warming events (say, from El Niño) are typically associated with (say) fewer clouds, we don’t know whether the warming caused the reduction in clouds (positive cloud feedback), or whether the reduction in clouds caused some of the warming, in which case the true feedback could actually be negative.

We have demonstrated that if fewer clouds cause warming, and the warming then causes more clouds (negative feedback), the net result still “looks like” positive cloud feedback. Dick Lindzen and co-authors have also published various results substantiating this view. Evidence for this is revealed by phase space analysis of the data, which goes beyond simple scatter plots, which typically reveal very low correlations.
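The cause-and-effect problem can be illustrated with a toy energy balance model. The sketch below is not the analysis referred to above; it is a minimal stand-in in which a 50 m ocean mixed layer is heated by random cloud variations while a strongly negative feedback (an assumed 6 W/m^2 per deg.) pulls the temperature back. The heat capacity, the persistence of the cloud forcing, and the function name are all assumptions chosen for illustration.

```python
import random


def diagnose_feedback(true_lambda=6.0, n_days=36500, seed=0):
    """Regress 'measured' radiative flux on temperature in a toy model.

    Returns the regression slope in W/m^2 per K, which is what a simple
    scatter-plot analysis would report as the feedback parameter.
    """
    rng = random.Random(seed)
    heat_capacity = 2.1e8  # J/m^2/K, roughly a 50 m mixed layer (assumed)
    dt = 86400.0           # one day, in seconds
    phi = 0.9              # day-to-day persistence of cloud forcing (assumed)

    temps, fluxes = [], []
    temp, cloud_forcing = 0.0, 0.0
    for _ in range(n_days):
        # Red-noise cloud forcing: fewer clouds means positive forcing.
        cloud_forcing = phi * cloud_forcing + rng.gauss(0.0, 1.0)
        # The measured flux mixes the feedback response with the forcing.
        temps.append(temp)
        fluxes.append(true_lambda * temp - cloud_forcing)
        # Energy balance: heat_capacity * dT/dt = forcing - lambda * T
        temp += dt / heat_capacity * (cloud_forcing - true_lambda * temp)

    mean_t = sum(temps) / n_days
    mean_f = sum(fluxes) / n_days
    cov = sum((t - mean_t) * (f - mean_f) for t, f in zip(temps, fluxes))
    var = sum((t - mean_t) ** 2 for t in temps)
    return cov / var


if __name__ == "__main__":
    print("true feedback parameter: 6.0")
    print("diagnosed from regression:", diagnose_feedback())
```

In this setup the regression slope comes out far below the true value of 6, which in climate terms would be read as a much more sensitive system than the one actually simulated. That is the sense in which clouds causing temperature change can masquerade as positive feedback.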

This is important because it shows that what climate modelers think is observational evidence for positive cloud feedback in the real climate system (which they then program into their climate models) is simply the result of sloppy and overly simplistic data analysis techniques.

Water Vapor Feedback

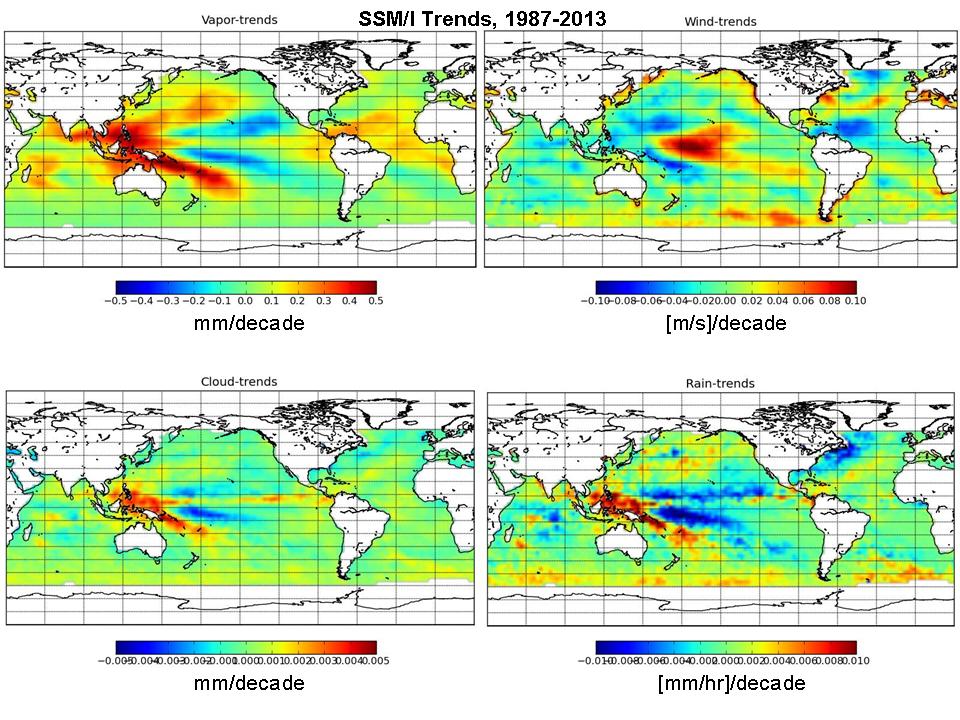

The other major feedback is water vapor, which approximately doubles the 1 deg of first principles warming in the models. Here the modelers believe they are on firmer ground than for cloud feedbacks, since there is plenty of observational evidence that warming is associated with more atmospheric water vapor, on average, in the lower troposphere, due to increased surface evaporation caused by warmer temperatures.

But even in the case of water vapor feedback, the situation might not be as simple as they believe. By far the biggest impact of water vapor on the Earth’s ability to cool itself is in the middle and upper troposphere, where it is precipitation processes, not surface evaporation, that determine the water vapor content.

Most of the air at these altitudes was detrained out of precipitation systems, which removed most of the vapor as precipitation. This is why the water vapor content at those altitudes is so low.

So, what determines the efficiency of precipitation systems? If warming increases their efficiency at removing vapor, there could be a slight drying of the middle and upper troposphere at the same time that the lower troposphere becomes more humid. The net result would be negative water vapor feedback, even though the total absolute amount of water vapor in the troposphere has increased (because a tiny decrease in upper tropospheric vapor causes more cooling than a large increase in lower tropospheric vapor causes warming).

This possibility is nothing new; it’s been known for decades (see an extended water vapor feedback discussion here). Long-term weather balloon data extending back to the 1950s actually show lower tropospheric moistening and mid-tropospheric drying, at least suggesting the possibility that multi-decadal climate change involves negative, not positive, water vapor feedback. Miskolczi’s (2010) results of a constant greenhouse effect were basically due to the observed decrease in upper tropospheric water vapor exactly offsetting the greenhouse enhancement of increasing CO2 in the last 50 years.

The trouble is that we have very little knowledge of how the efficiency of precipitation systems changes with warming, and so climate models can’t even begin to address the physical principles involved.

Conclusion

These are just a few of the uncertainties involved in climate models’ predictions of non-trivial warming. Only the first 1 deg. of warming they produce can be considered to be from first principles, and even that is based upon a theoretical calculation.

Warming in excess of 1 deg., which is what people worry most about, is due to highly uncertain feedbacks. The extent to which the models agree on these feedbacks is probably more a reflection of groupthink on the part of the modelers, and the pressure they are under (political, financial, career, world-view, etc.) to produce results which support global warming as more than just a trivial concern for humanity.

One aspect of feedbacks I have been wondering about for years is related to their possible time-scale dependence. By way of analogy, we see positive feedbacks in the formation of weather systems, sometimes culminating in hurricanes or tornadoes. But these are ‘dissipational structures’, which concentrate energy only briefly as part of an overall process that ends up dissipating energy on longer time scales.

Might it be that some feedbacks in the climate system are indeed positive for short-term (e.g. year-to-year) variability, but the long-term feedbacks end up being negative, as suggested (for example) by the decrease in upper tropospheric water vapor since the 1950s? If that is the case, the models should not be built to have long-term feedbacks based upon short-term data.

Ultimately, the question we have to answer is this: Do we really believe that nature amplifies any temperature influence humans have on the climate system, or minimizes it? For now, the IPCC is betting on amplification.

But when you are asked to believe the IPCC’s pronouncements about the serious threat of climate change, understand that the greater the model warming they point to, the more suspect is the underlying evidence. This ‘dubious’ component of model-predicted warming is caused by relatively few lines of computer code (the weak link in the chain) buried among thousands of lines of code which might otherwise be performing very realistically.

[NOTE for engineers: In climate parlance, positive feedbacks never overcome the climate stabilizing influence of the direct increase in infrared radiative cooling with temperature, called the Stefan-Boltzmann or Planck effect. So, in traditional engineering ‘feedback’ terms, even the IPCC’s climate models are stabilized by net negative feedback…the Planck effect just isn’t called a “feedback” by climate people. Sorry for the confusion…I didn’t make the rules.]
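For the engineers, the bookkeeping in the note above can be sketched as follows. The Planck response of 3.2 W/m^2 per deg. and the 3.7 W/m^2 forcing are commonly quoted round numbers that I am assuming; the 2.0 W/m^2 per deg. feedback sum is purely hypothetical, as are the function names.

```python
def net_restoring(planck_response, feedback_sum):
    """Net restoring strength in W/m^2 per K.

    planck_response: extra infrared cooling per kelvin (always stabilizing).
    feedback_sum: sum of climate 'feedbacks' in W/m^2 per K, where a
    positive value offsets part of the Planck response.
    """
    return planck_response - feedback_sum


def is_stable(planck_response, feedback_sum):
    """Stable, in the engineering sense, if the net restoring term is > 0."""
    return net_restoring(planck_response, feedback_sum) > 0.0


def equilibrium_warming(forcing, planck_response, feedback_sum):
    """Equilibrium warming for a given forcing; requires a stable system."""
    net = net_restoring(planck_response, feedback_sum)
    if net <= 0.0:
        raise ValueError("no stable equilibrium: feedbacks exceed Planck response")
    return forcing / net


if __name__ == "__main__":
    # Positive feedbacks of 2.0 against a Planck response of 3.2:
    # still net negative feedback in engineering terms, so still stable.
    print(is_stable(3.2, 2.0))
    print(equilibrium_warming(3.7, 3.2, 2.0))
```

With these illustrative numbers the system stays stable while the warming is roughly tripled compared with the Planck-only case, which is the distinction between the two uses of the word "feedback".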
