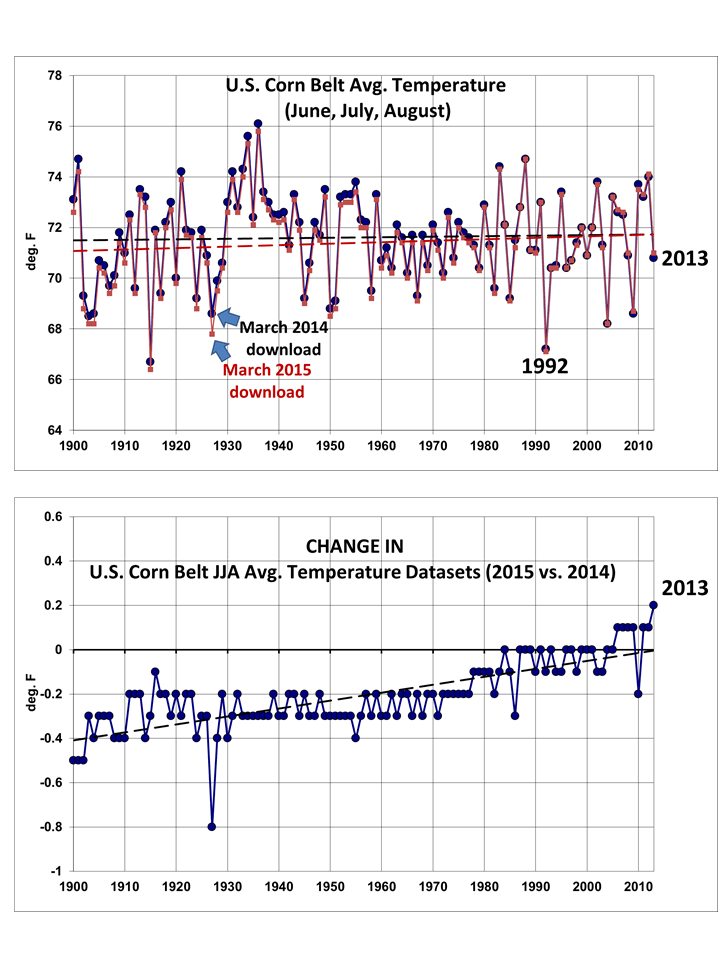

I was updating a U.S. Corn Belt summer temperature and precipitation dataset from the NCDC website when, all of a sudden, what had been no warming trend since 1900 turned into a significant warming trend. (Clarification: the new warming trend for 1900-2013 is still not significantly different from zero at the 90% confidence level. H/T, Pat Michaels)

As can be seen in the following chart, the largest adjustments were to earlier years in the dataset, which were made colder. The linear trend changes from 0.2 deg F/century to 0.6 deg F/century.
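For readers who want to check a trend like this themselves, here is a minimal sketch of how a linear trend in deg F/century and its 90% confidence interval could be computed from a series of yearly summer temperatures. It is not the code behind the chart. The function name `trend_per_century` is made up for illustration, the interval uses a normal approximation to the t distribution (reasonable for a century-plus of data), and it assumes the year-to-year residuals are independent, which real temperature series often are not.

```python
from statistics import NormalDist, mean


def trend_per_century(years, temps_f, confidence=0.90):
    """Ordinary least-squares trend in deg F/century.

    Returns (trend, (low, high), significant), where (low, high) is an
    approximate confidence interval and `significant` is True when that
    interval excludes zero. Hypothetical helper, for illustration only.
    """
    n = len(years)
    if n < 3 or n != len(temps_f):
        raise ValueError("need at least 3 paired (year, temperature) values")

    x_bar, y_bar = mean(years), mean(temps_f)
    sxx = sum((x - x_bar) ** 2 for x in years)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(years, temps_f))

    slope = sxy / sxx                  # deg F per year
    intercept = y_bar - slope * x_bar

    # Residual sum of squares; slope standard error uses n - 2 degrees of freedom.
    sse = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(years, temps_f))
    se_slope = (sse / (n - 2) / sxx) ** 0.5

    # Assumption: normal approximation to the t critical value,
    # and no correction for autocorrelation in the residuals.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    half_width = z * se_slope

    low, high = (slope - half_width) * 100, (slope + half_width) * 100
    return slope * 100, (low, high), not (low <= 0.0 <= high)
```

Calling `trend_per_century(years, temps_f)` on the old and the new version of the same dataset would give the two trends side by side, along with whether each one is distinguishable from zero at the 90% level.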

I know others have commented on the tendency of NOAA's thermometer data adjustments to always lead to greater warming.

As Dick Lindzen has noted, it seems highly improbable that successive revisions to the very same data would lead to ever greater warming trends. Being the co-developer of a climate dataset (UAH satellite temperatures), I understand the need to make adjustments for known errors in the data…when you can quantitatively demonstrate an error exists.

But a variety of errors in data measurement and collection would typically have both positive and negative signs. For example, orbit decay causes a spurious cooling trend in the satellite lower tropospheric temperatures (discovered by RSS), while the instrument body temperature effect causes a spurious warming trend (discovered by us). The two effects approximately cancel out over the long term, but we (and RSS) make corrections for them anyway since they affect different years differently.

Also, the drift in satellite local observation time associated with orbit decay causes spurious cooling in the 1:30 satellites, but spurious warming in the 7:30 satellites. Again, this shows that a variety of errors typically have both positive and negative signs.

In contrast, the thermometer data apparently need to be adjusted in such a way that almost always leads to greater and greater warming trends.

How odd.
