The NASA CERES project has updated their EBAF-TOA Edition 2.8 radiative flux dataset through March of 2015, which now extends the global CERES record to just over 15 years (since March 2000, starting with NASA’s Terra satellite). This allows us to get an update of how the radiative budget of the Earth responds to surface temperature variations, which is what determines climate sensitivity and thus how much warming (and associated climate change) we can expect from a given amount of radiative forcing (assuming the forcing-feedback paradigm is sufficiently valid for the climate system).

As those familiar with my work know, I have a strong (and published) opinion on estimating feedback from observed variations in global radiative flux and surface temperature. Dick Lindzen and his co-authors have published on the same issue and agree with me.

Specifically:

Time-varying radiative forcing in the climate system (e.g. due to increasing CO2, volcanic eruptions, and natural cloud variations) corrupts the determination of radiative feedback.

This is the “cause-versus-effect” issue I have been harping on for years, and discussed extensively in my book, The Great Global Warming Blunder. It is almost trivially simple to demonstrate (e.g. published here, despite the resignation of that journal’s editor [forced by Kevin Trenberth?] for allowing such a sacrilegious thing to be published).

It is also the reason why feedbacks in the CMIP5 climate models are diagnosed using one of two methods that lie outside the normal running of those models: either (1) imposing an instantaneous, large, and constant radiative forcing (4XCO2), so that the resulting radiative changes are almost entirely feedback in response to the substantial temperature change caused by that constant forcing; or (2) running a model with a fixed, elevated surface temperature to measure how much the radiative budget of the modeled climate system changes. The second method is less than optimal because it is not a radiative forcing like global warming, and the resulting model changes are not allowed to alter the surface temperature.
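Method (1) can be illustrated with a one-box model. This is a minimal sketch, not the CMIP5 protocol: the heat capacity, the feedback value, and the 7.4 W/m2 step forcing (two doublings at an assumed 3.7 W/m2 per doubling) are all hypothetical choices. Because the forcing never changes after it is switched on, every change in the net imbalance is feedback, so regressing imbalance on temperature returns -λ as the slope and the forcing as the intercept.

```python
import random

def ols(x, y):
    """Ordinary least-squares slope and intercept of y regressed on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx

def abrupt_forcing_run(forcing=7.4, lam=2.0, heat_cap=8.0, years=150, noise=0.0, seed=0):
    """Step a one-box model C dT/dt = F - lam*T under a constant forcing F.

    Returns yearly temperature anomalies T and net downward imbalances
    N = F - lam*T (W/m^2), with optional Gaussian measurement noise on N.
    All parameter values are hypothetical.
    """
    rng = random.Random(seed)
    temp = 0.0
    temps, imbalances = [], []
    for _ in range(years):
        imbalance = forcing - lam * temp
        temps.append(temp)
        imbalances.append(imbalance + rng.gauss(0.0, noise))
        temp += imbalance / heat_cap  # explicit Euler step of one year
    return temps, imbalances

temps, imbalances = abrupt_forcing_run()
slope, intercept = ols(temps, imbalances)
print(f"feedback = {-slope:.2f} W/m^2/K, forcing = {intercept:.2f} W/m^2")
```

With noise added (for example `noise=0.3`), the slope still scatters around -λ, because the constant forcing contributes nothing time-varying to the imbalance.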

If you try to do it with any climate model in its normal operating mode (which has time-varying radiative forcing), you will almost always get an underestimate of the real feedback operating in the model (and thus an over-estimate of climate sensitivity). We showed this in our Remote Sensing paper. So why would anyone expect anything different using data from the real climate system, as (for example) Andy Dessler has done for cloud feedbacks?

(It is possible *IF* you know the time history of the radiative forcing imposed upon the model, and subtract it out from the model radiative fluxes. That information was not archived for CMIP3, and I don’t know whether it is archived for the CMIP5 model runs).
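A minimal one-box sketch makes both points concrete. The symbols are my own labels for illustration: $C$ is heat capacity, $T$ the surface temperature anomaly, $N$ radiative forcing, $S$ non-radiative forcing, $\lambda$ the net feedback parameter, and $R$ the net downward radiative flux anomaly at the top of the atmosphere.

$$
C\,\frac{dT}{dt} = N(t) + S(t) - \lambda T, \qquad R = N - \lambda T
$$

$$
\hat{b} = \frac{\operatorname{cov}(R,T)}{\operatorname{var}(T)} = -\lambda + \frac{\operatorname{cov}(N,T)}{\operatorname{var}(T)}
$$

If $N$ is known, $R - N = -\lambda T$ and the feedback falls out directly. If it is not, the regression slope $\hat{b}$ picks up the extra term; because $N$ itself drives $T$, $\operatorname{cov}(N,T)$ is typically positive, so $\hat{b}$ is pulled toward zero and the diagnosed feedback comes out too small.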

But what we have in the real climate system is some unknown mixture of radiative forcing(s) and feedback, with the non-feedback radiative variations de-correlating the relationship between radiative feedback and temperature. Thus, diagnosing feedback by comparing observed radiative flux variations to observed surface temperature variations is error-prone, and the errors usually lean toward high climate sensitivity. (This is because “radiative forcing noise” in the data pushes the regression slope toward zero, which would erroneously indicate a borderline unstable climate system.)
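A small Monte Carlo sketch illustrates the bias. It assumes a one-box model stepped monthly, with white-noise radiative forcing (standing in for, e.g., natural cloud variations) and white-noise non-radiative forcing (standing in for ocean overturning changes); all parameter values are hypothetical. The "observed" flux is radiative forcing minus feedback. Regressing that flux on temperature gives a feedback estimate biased toward zero, while subtracting the known forcing first, as is possible with a model whose forcing history is archived, recovers the true value.

```python
import random

def ols_slope(x, y):
    """Ordinary least-squares slope of y regressed on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy / sxx

def simulate(lam=3.0, heat_cap=8.0, months=12000, rad_sd=0.5, nonrad_sd=2.0, seed=1):
    """One-box model C dT/dt = N + S - lam*T, stepped monthly (hypothetical values).

    N: radiative forcing, white noise.  S: non-radiative forcing, white noise.
    Returns temperature, 'observed' flux R = N - lam*T, and the forcing N.
    """
    rng = random.Random(seed)
    dt = 1.0 / 12.0  # one month, in years
    temp = 0.0
    temps, fluxes, forcings = [], [], []
    for _ in range(months):
        n_rad = rng.gauss(0.0, rad_sd)
        s_nonrad = rng.gauss(0.0, nonrad_sd)
        temp += dt * (n_rad + s_nonrad - lam * temp) / heat_cap
        temps.append(temp)
        forcings.append(n_rad)
        fluxes.append(n_rad - lam * temp)  # forcing and feedback mixed together
    return temps, fluxes, forcings

temps, fluxes, forcings = simulate()
naive_lam = -ols_slope(temps, fluxes)
feedback_only = [r - n for r, n in zip(fluxes, forcings)]
recovered_lam = -ols_slope(temps, feedback_only)
print(f"true 3.00, naive {naive_lam:.2f}, forcing removed {recovered_lam:.2f}")
```

Raising `rad_sd` relative to `nonrad_sd` makes the naive estimate drift further toward zero, which is the "radiative forcing noise" effect described above.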

What is necessary is to have non-radiatively forced variations in global-average surface temperature large enough to partly overcome the noise in the data. The largest single source of this non-radiative forcing is El Nino/La Nina, which correspond to a global-average weakening/strengthening of the overturning of the ocean.

It turns out that noise (both measurement and geophysical) can be partly beaten down by time-averaging, so averages anywhere from 3 months to a year can be used, whichever gives the highest correlation.
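The effect of averaging can be sketched with synthetic data. This assumes non-overlapping block averages (the post does not say whether running or block means are used) and a hypothetical 4-year, ENSO-like cycle buried in white noise in both the temperature and flux series.

```python
import math
import random

def block_means(series, width):
    """Non-overlapping averages of `width` consecutive values (partial tail dropped)."""
    return [sum(series[i:i + width]) / width
            for i in range(0, len(series) - width + 1, width)]

def corr(x, y):
    """Pearson correlation coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

rng = random.Random(42)
months = 600
# Hypothetical slow 48-month cycle shared by both series, plus independent noise.
signal = [math.sin(2 * math.pi * t / 48) for t in range(months)]
temp = [s + rng.gauss(0.0, 1.0) for s in signal]
flux = [-3.0 * s + rng.gauss(0.0, 1.0) for s in signal]

for width in (1, 3, 12):
    r = corr(block_means(temp, width), block_means(flux, width))
    print(f"{width:2d}-month averages: r = {r:+.2f}")
```

Averaging suppresses the white noise much more than it attenuates the slow cycle, so the correlation strengthens with longer averages; with real data, the best averaging period is whichever gives the highest correlation.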

Also, a time lag of 1 to 4 months is usually necessary, because most of the net radiative feedback comes from the atmospheric response to a surface temperature change, which takes time to develop. Again, the optimum time lag is the one that gives the highest correlation, and it appears to be longest (up to 4 months) during El Nino and La Nina events.
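Choosing the lag by maximum correlation can be sketched as a simple scan. The function names are hypothetical, the flux is taken to lag temperature, and absolute correlation is used because the sign depends on how net flux is defined. The synthetic check builds in a 3-month lag and confirms the scan finds it.

```python
import math
import random

def corr(x, y):
    """Pearson correlation coefficient."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def lagged_corr(temp, flux, lag):
    """Correlate temp[t] with flux[t + lag] (flux lagging temperature by `lag` steps)."""
    n = len(temp) - lag
    return corr(temp[:n], flux[lag:lag + n])

def best_lag(temp, flux, max_lag=4):
    """Return the lag (0..max_lag) with the largest absolute correlation."""
    return max(range(max_lag + 1), key=lambda k: abs(lagged_corr(temp, flux, k)))

# Synthetic check: flux responds to temperature 3 months later (hypothetical values).
rng = random.Random(7)
temp = [rng.gauss(0.0, 1.0) for _ in range(240)]
flux = [(-2.0 * temp[t - 3] if t >= 3 else 0.0) + rng.gauss(0.0, 0.5) for t in range(240)]
print(best_lag(temp, flux))  # 3
```

In real data the temperature series is strongly autocorrelated, so the correlation peak across lags is much broader than in this white-noise test.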

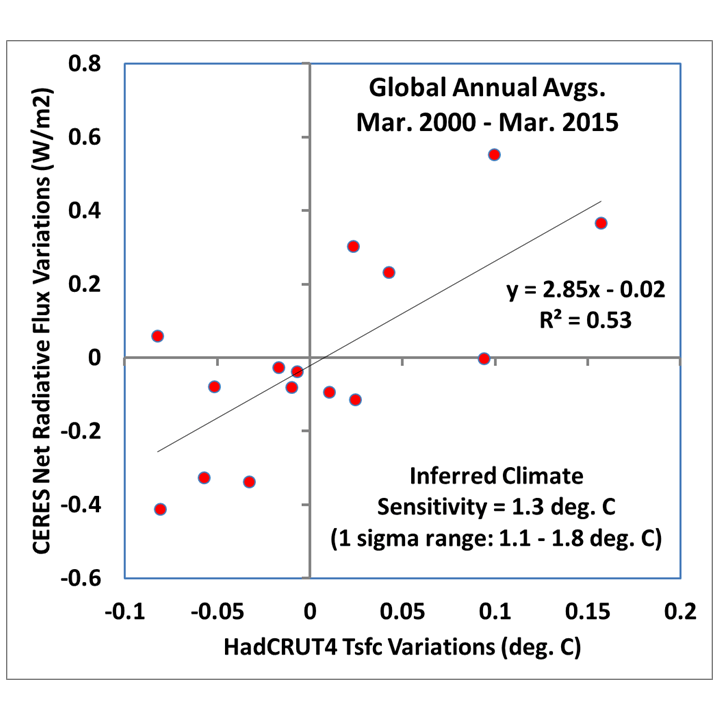

Anyway, here is the result for 15 years of annual CERES net radiative flux variations and HadCRUT4 surface temperature variations, with the radiative flux lagged 4 months after temperature:

Fig. 1. Global, annual area averages of CERES-measured Net radiative flux variations against surface temperature variations from HadCRUT4, with a 4 month time lag to maximize correlation (flux after temperature).

Coincidentally, the 1.3 deg. C best estimate for the climate sensitivity from this graph is the same as we got with our 1D forcing-feedback-mixing climate model, and as I recently got with a simplified model that stores energy in the deep ocean at the observed rate (0.2 W/m2 average since the 1950s).
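The link between the feedback parameter and climate sensitivity is a simple division. Assuming the commonly used value of 3.7 W/m2 for the forcing from doubled CO2 (an assumption here; the fitted slope in Fig. 1 is not reproduced), a 1.3 deg. C sensitivity corresponds to a net feedback of roughly 2.85 W/m2 per deg. C. The loop shows why an underestimated feedback inflates the sensitivity estimate.

```python
F_2X = 3.7  # W/m^2 per CO2 doubling (assumed, commonly used value)

def sensitivity(feedback):
    """Equilibrium warming (deg. C) for doubled CO2, given feedback in W/m^2/K."""
    return F_2X / feedback

def implied_feedback(sensitivity_c):
    """Feedback parameter (W/m^2/K) implied by a given sensitivity (deg. C)."""
    return F_2X / sensitivity_c

print(f"{implied_feedback(1.3):.2f} W/m^2/K")  # 2.85 W/m^2/K
# Diagnosing too small a feedback inflates the sensitivity:
for lam in (2.85, 2.0, 1.0):
    print(f"lambda = {lam:.2f} -> {sensitivity(lam):.1f} deg. C")
```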

Again, the radiative forcing remaining in the 15 years of data causes decorrelation and (almost always) an underestimate of the feedback parameter (and thus an overestimate of climate sensitivity). So, the real sensitivity might be well below 1.3 deg. C, as Lindzen believes. The inherent problem in diagnosing feedbacks from observational data is one I am absolutely sure exists, and it is largely ignored. Most of the “experts” who are part of the scientific consensus aren’t even aware of it, which shows how a small, obscure issue can change our perception of how sensitive the climate system is.

This is also just one example of why hundreds (or even thousands) of “experts” agreeing on something as complex as climate change really doesn’t mean anything. It’s just group think in an echo chamber riding on a bandwagon.

Now, one can legitimately argue that the relationship in the above graph is still noisy, and so remains uncertain. But this is the most important piece of information we have to observationally determine how the real climate system responds radiatively to surface temperature changes, which then determines how big a problem global warming might be.

It’s clear that the climate models can be programmed to get just about any climate sensitivity one wants…currently covering a range of about a factor of 3! So, at some point we need to listen to what Mother Nature is telling us. And the above graph tells us that the climate system appears to be more stable than the experts believe.
