UPDATE: (1300 CDT, Sept. 11, 2019). I’ve added a plot of ten CMIP5 models’ global top-of-atmosphere longwave IR variations in the first 100 years of their control runs.

UPDATE #2: (0800 CDT, Sept. 12, 2019). After comments from Dr. Frank and a number of commenters here and at WUWT, I have posted Additional Comments on the Frank (2019) Propagation of Error Paper, where I have corrected my mistake of paraphrasing Dr. Frank’s conclusions, when I should have been quoting them verbatim.

I’ve been asked for my opinion by several people about this new published paper by Stanford researcher Dr. Patrick Frank.

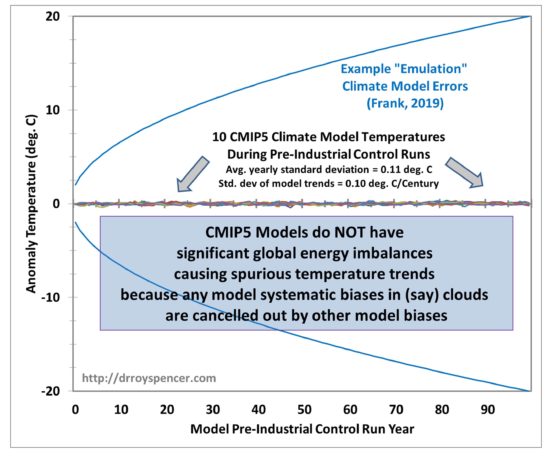

I’ve spent a couple of days reading the paper and programming his Eq. 1 (a simple “emulation model” of climate model output), including his error propagation term (Eq. 6), to make sure I understand his calculations.

Frank has provided the numerous peer reviewers’ comments online, which I have purposely not read in order to provide an independent review. But I mostly agree with his criticism of the peer review process in his recent WUWT post where he describes the paper in simple terms. In my experience, “climate consensus” reviewers sometimes give the most inane and irrelevant objections to a paper if they see that the paper’s conclusion in any way might diminish the Climate Crisis™.

Some reviewers don’t even read the paper; they just look at the conclusions, see who the authors are, and make a decision based upon their preconceptions.

Readers here know I am critical of climate models in the sense they are being used to produce biased results for energy policy and financial reasons, and their fundamental uncertainties have been swept under the rug. What follows is not meant to defend current climate model projections of future global warming; it is meant to show that — as far as I can tell — Dr. Frank’s methodology cannot be used to demonstrate what he thinks he has demonstrated about the errors inherent in climate model projection of future global temperatures.

A Very Brief Summary of What Causes a Global-Average Temperature Change

Before we go any further, you must understand one of the most basic concepts underpinning temperature calculations: With few exceptions, the temperature change in anything, including the climate system, is due to an imbalance between energy gain and energy loss by the system. This is basic 1st Law of Thermodynamics stuff.

So, if energy loss is less than energy gain, warming will occur. In the case of the climate system, the warming in turn results in an increased loss of infrared radiation to outer space. The warming stops once the temperature has risen to the point that the increased loss of infrared (IR) radiation to outer space (quantified through the Stefan-Boltzmann [S-B] equation) once again achieves global energy balance with absorbed solar energy.

While the specific mechanisms might differ, these energy gain and loss concepts apply similarly to the temperature of a pot of water warming on a stove. Under a constant low flame, the water temperature stabilizes once the rate of energy loss from the water and pot equals the rate of energy gain from the stove.
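The gain-versus-loss idea above can be illustrated with a minimal zero-dimensional energy balance sketch. It is only an illustration: the heat capacity, the constant energy gain, and the rate at which energy loss grows with temperature are all assumed values chosen for the example, not numbers from any climate model.

```python
# Zero-dimensional energy balance sketch: C * dT/dt = gain - loss_rate * T
# All parameter values are illustrative assumptions.

def simulate_energy_balance(forcing_wm2, feedback_wm2_per_k,
                            heat_capacity_j_m2_k, years, steps_per_year=12):
    """Integrate the temperature anomaly (K) with forward Euler steps."""
    seconds_per_step = 365.25 * 86400 / steps_per_year
    temp_anomaly = 0.0
    history = []
    for _ in range(years * steps_per_year):
        # Net imbalance: extra energy gain minus the extra loss caused by warming
        imbalance = forcing_wm2 - feedback_wm2_per_k * temp_anomaly
        temp_anomaly += imbalance * seconds_per_step / heat_capacity_j_m2_k
        history.append(temp_anomaly)
    return history


forcing = 1.0    # assumed constant extra energy gain, W/m2
feedback = 3.2   # assumed extra IR loss per kelvin of warming, W/m2/K
history = simulate_energy_balance(
    forcing_wm2=forcing,
    feedback_wm2_per_k=feedback,
    heat_capacity_j_m2_k=4.0e8,  # assumed: roughly a 100 m layer of water
    years=100,
)
equilibrium = forcing / feedback  # anomaly at which loss again equals gain
print(f"final anomaly: {history[-1]:.3f} K, equilibrium: {equilibrium:.3f} K")
```

With these assumed numbers the anomaly rises quickly at first and then levels off at the equilibrium value, which is the pot-on-the-stove behavior described above.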

The climate stabilizing effect from the S-B equation (the so-called “Planck effect”) applies to Earth’s climate system, Mars, Venus, and computerized climate models’ simulations. Just for reference, the average flows of energy into and out of the Earth’s climate system are estimated to be around 235-245 W/m2, but we don’t really know for sure.
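As a rough worked example of the S-B relationship, the sketch below converts the 235-245 W/m2 range quoted above into the temperature of an ideal blackbody that would emit that flux, and into the extra IR loss per degree of warming. Treating the Earth as a perfect blackbody is a simplifying assumption made only for this illustration.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def emission_temperature(flux_wm2):
    """Temperature (K) of an ideal blackbody that radiates the given flux."""
    return (flux_wm2 / SIGMA) ** 0.25


def planck_response(flux_wm2):
    """Extra IR loss per kelvin of warming: d(sigma*T^4)/dT = 4*flux/T."""
    return 4.0 * flux_wm2 / emission_temperature(flux_wm2)


for flux in (235.0, 240.0, 245.0):
    temp = emission_temperature(flux)
    response = planck_response(flux)
    print(f"{flux:.0f} W/m2 -> {temp:.1f} K, {response:.2f} W/m2 per K")
```

The second function is the stabilizing part: the warmer the emitting body, the more energy it loses, so a given imbalance produces a finite temperature change.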

What Frank’s Paper Claims

Frank’s paper takes an example of a known bias in a typical climate model’s longwave (infrared) cloud forcing (LWCF) and assumes that the typical model’s error (+/-4 W/m2) in LWCF can be applied in his emulation model equation, propagating the error forward in time during his emulation model’s integration. The result is a huge amount (as much as 20 deg. C or more) of spurious model warming (or cooling) in future global average surface air temperature (GASAT).

He claims (I am paraphrasing) that this is evidence that the models are essentially worthless for projecting future temperatures, as long as such large model errors exist. This sounds reasonable to many people. But, as I will explain below, the methodology of using known climate model errors in this fashion is not valid.

First, though, a few comments. On the positive side, the paper is well-written, with extensive examples, and is well-referenced. I wish all “skeptics” papers submitted for publication were as professionally prepared.

He has provided more than enough evidence that the output of the average climate model for GASAT at any given time can be approximated as just an empirical constant times a measure of the accumulated radiative forcing at that time (his Eq. 1). He calls this his “emulation model”, and his result is unsurprising, and even expected. Since global warming in response to increasing CO2 is the result of an imposed energy imbalance (radiative forcing), it makes sense you could approximate the amount of warming a climate model produces as just being proportional to the total radiative forcing over time.
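The proportionality described above can be sketched in a few lines. This is not Frank’s Eq. 1 itself, only the general idea of “a constant times accumulated forcing”; the function name, the constant, and the forcing increments are all hypothetical values chosen for illustration.

```python
from itertools import accumulate


def emulate_warming(forcing_increments_wm2, kelvin_per_wm2):
    """Warming (K) at each step as a constant times the accumulated forcing."""
    return [kelvin_per_wm2 * total for total in accumulate(forcing_increments_wm2)]


# Hypothetical inputs: 0.04 W/m2 of forcing added each year for 100 years,
# and an assumed empirical constant of 0.5 K per W/m2.
increments = [0.04] * 100
warming = emulate_warming(increments, kelvin_per_wm2=0.5)
print(f"warming after {len(warming)} years: {warming[-1]:.2f} K")
```

With steadily increasing forcing, the emulated warming is simply a straight line, which is why such a simple expression can track the much more complex model output.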

Frank then goes through many published examples of the known bias errors climate models have, particularly for clouds, when compared to satellite measurements. The modelers are well aware of these biases, which can be positive or negative depending upon the model. The errors show that (for example) we do not understand clouds, and all of the processes controlling their formation and dissipation, from first physical principles; otherwise all models would get very nearly the same cloud amounts.

But there are two fundamental problems with Dr. Frank’s methodology.

Climate Models Do NOT Have Substantial Errors in their TOA Net Energy Flux

If any climate model has as large as a 4 W/m2 bias in top-of-atmosphere (TOA) energy flux, it would cause substantial spurious warming or cooling. None of them do.

Why?

Because each of these models is already energy-balanced before it is run with increasing greenhouse gases (GHGs), so there is no inherent bias error to propagate.

For example, the following figure shows 100 year runs of 10 CMIP5 climate models in their pre-industrial control runs. These control runs are made by modelers to make sure that there are no long-term biases in the TOA energy balance that would cause spurious warming or cooling.

https://climexp.knmi.nl/selectfield_cmip5.cgi?id=someone@somewhere .

If what Dr. Frank is claiming were true, the 10 climate model runs in Fig. 1 would show large temperature departures as in the emulation model, with large spurious warming or cooling. But they don’t. You can barely see the yearly temperature deviations, which average about +/-0.11 deg. C across the ten models.

Why don’t the climate models show such behavior?

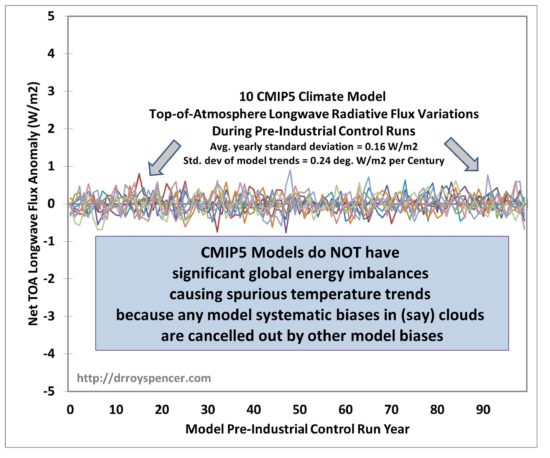

The reason is that the +/-4 W/m2 bias error in LWCF assumed by Dr. Frank is almost exactly cancelled by other biases in the climate models that make up the top-of-atmosphere global radiative balance. To demonstrate this, here are the corresponding TOA net longwave IR fluxes for the same 10 models shown in Fig. 1. Clearly, there is nothing like 4 W/m2 imbalances occurring.

The average yearly standard deviation of the LW flux variations is only 0.16 W/m2, and these vary randomly.

And it doesn’t matter how correlated or uncorrelated those various errors are with each other: they still sum to nearly zero in the long term, which is why the climate model trends in Fig. 1 are only +/-0.10 deg. C/Century… not +/-20 deg. C/Century. That’s a factor of 200 difference.

This (first) problem with the paper’s methodology is, by itself, enough to conclude the paper’s methodology and resulting conclusions are not valid.

The Error Propagation Model is Not Appropriate for Climate Models

The new (and generally unfamiliar) part of his emulation model is the inclusion of an “error propagation” term (his Eq. 6). After introducing Eq. 6 he states,

“Equation 6 shows that projection uncertainty must increase in every simulation (time) step, as is expected from the impact of a systematic error in the deployed theory”.

While this error propagation model might apply to some issues, there is no way that it applies to a climate model integration over time. If a model actually had a +4 W/m2 imbalance in the TOA energy fluxes, that bias would remain relatively constant over time. It doesn’t somehow accumulate (as the blue curves indicate in Fig. 1) as the square root of the summed squares of the error over time (his Eq. 6).

Another curious aspect of Eq. 6 is that it will produce wildly different results depending upon the length of the assumed time step. Dr. Frank has chosen 1 year as the time step (with a +/-4 W/m2 assumed energy flux error), which will cause a certain amount of error accumulation over 100 years. But if he had chosen a 1 month time step, there would be 12x as many error accumulations and a much larger deduced model error in projected temperature. This should not happen, as the final error should be largely independent of the model time step chosen. Furthermore, the assumed error with a 1 month time step would be even larger than +/-4 W/m2, which would have magnified the final error after a 100 year integration even more. This makes no physical sense.
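The time step dependence can be seen with a small root-sum-square sketch. This is not a reproduction of Frank’s Eq. 6, only the “square root of the summed squares” accumulation as I have described it; the per-step uncertainty is a placeholder of 1.0 in arbitrary units, and holding it fixed when the time step changes is an assumption made to keep the example simple.

```python
import math


def propagated_uncertainty(per_step_uncertainty, n_steps):
    """Root-sum-square of the same +/- uncertainty applied at every step."""
    return math.sqrt(sum(per_step_uncertainty ** 2 for _ in range(n_steps)))


per_step = 1.0  # hypothetical per-step uncertainty, arbitrary units
yearly = propagated_uncertainty(per_step, 100)        # 100 one-year steps
monthly = propagated_uncertainty(per_step, 100 * 12)  # 1200 one-month steps
print(f"yearly steps:  {yearly:.2f}")
print(f"monthly steps: {monthly:.2f}")
print(f"ratio:         {monthly / yearly:.2f}")
```

Even with the per-step value held fixed, the same 100 years gives a final uncertainty larger by the square root of 12 when monthly steps are used, so the answer depends on an arbitrary choice of step length.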

I’m sure Dr. Frank is much more expert in the error propagation model than I am. But I am quite sure that Eq. 6 does not represent how a specific bias in a climate model’s energy flux component would change over time. It is one thing to invoke an equation that might well be accurate and appropriate for certain purposes, but that equation is the result of a variety of assumptions, and I am quite sure one or more of those assumptions are not valid in the case of climate model integrations. I hope that a statistician such as Dr. Ross McKitrick will examine this paper, too.

Concluding Comments

There are other, minor, issues I have with the paper. Here I have outlined the two most glaring ones.

Again, I am not defending the current CMIP5 climate model projections of future global temperatures. I believe they produce about twice as much global warming of the atmosphere-ocean system as they should. Furthermore, I don’t believe that they can yet simulate known low-frequency oscillations in the climate system (natural climate change).

But in the context of global warming theory, I believe the largest model errors are the result of a lack of knowledge of the temperature-dependent changes in clouds and precipitation efficiency (thus free-tropospheric vapor, thus water vapor “feedback”) that actually occur in response to a long-term forcing of the system from increasing carbon dioxide. I do not believe it is because the fundamental climate modeling framework is not applicable to the climate change issue. Having multiple modeling centers around the world, each performing multiple experiments with its climate model under different assumptions, is still the best strategy to get a handle on how much future climate change there *could* be.

My main complaint is that modelers are either deceptive about, or unaware of, the uncertainties in the myriad assumptions — both explicit and implicit — that have gone into those models.

There are many ways that climate models can be faulted. I don’t believe that the current paper represents one of them.

I’d be glad to be proved wrong.
