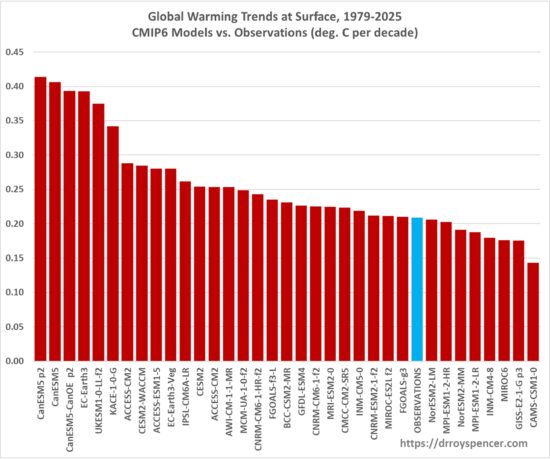

This is just a short update on how global surface air temperature (Tsfc) trends are tracking against 34 CMIP6 climate models through 2025. The plot shows the Tsfc trends for 1979-2025, ranked from warmest to coolest.

“Observations” is an average of 4 datasets: HadCRUT5, NOAAGlobalTemp Version 6 (now featuring AI, of course), ERA5 (a reanalysis dataset), and the Berkeley 1×1 deg. dataset, which produces a trend identical to HadCRUT5 (+0.205 °C/decade).
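As a rough illustration of the arithmetic behind an “average of 4 datasets” trend, here is a minimal Python sketch. It assumes annual global anomalies and an ordinary least-squares (OLS) fit; the function names and the synthetic series are hypothetical and are not the author's actual processing. Because the OLS slope is linear in the data, the trend of the averaged series equals the average of the individual trends when all datasets share the same years, so baseline offsets between datasets do not matter.

```python
from statistics import fmean


def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    mx, my = fmean(xs), fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


def trend_per_decade(years, anomalies):
    """OLS trend in deg C per decade from annual anomalies."""
    return 10.0 * ols_slope(years, anomalies)


def average_datasets(series_list):
    """Element-wise mean of several annual anomaly series on the same years."""
    return [fmean(values) for values in zip(*series_list)]


# Hypothetical example: two synthetic datasets with the same 0.0205 C/yr slope
# but different baselines (a constant offset), 1979-2025.
years = list(range(1979, 2026))
dataset_a = [0.0205 * (y - 1979) for y in years]
dataset_b = [0.0205 * (y - 1979) + 0.1 for y in years]
obs = average_datasets([dataset_a, dataset_b])
print(round(trend_per_decade(years, obs), 3))  # 0.205
```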

I consider reanalyses to be in the class of “observations” since they are constrained to match, in some average sense, the measurements made from the surface, weather balloons, global commercial aircraft, satellites, and the kitchen sink.

The observations moved up one place in the rankings since the last time I made one of these plots, mainly due to an anomalously warm 2024.

In your view, do CMIP6 models represent a significant improvement over CMIP5 when compared with observations?

No model that uses GHG radiative forcing is a significant improvement.

Indeed!

Sure... basic atmospheric physics must be wrong!

No, basic physics appears to be correct.

Glad to hear that you now understand that CO2 produces a GHE. Because that is what the atmospheric physics demonstrates.

Yeah Nate, F = 5.35 ln(C/C0). That’s physics.

Derived from physics.

I hope Stephen is just being facetious. That equation is completely bogus. It’s the opposite of reality.

Nate is going to explain how it was derived.
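For reference, here is what the expression quoted above evaluates to, as a minimal Python sketch. It assumes CO2 concentrations in ppm and reads F as the forcing in W/m² relative to a reference concentration C0 (the 280 ppm reference below is just an example value). It only evaluates the formula; it says nothing about how the formula was derived or whether it is right.

```python
import math


def co2_forcing(c_ppm, c0_ppm):
    """Evaluate F = 5.35 * ln(C / C0), in W/m^2, for CO2 concentrations in ppm."""
    return 5.35 * math.log(c_ppm / c0_ppm)


# Doubling from an example 280 ppm reference:
print(round(co2_forcing(560.0, 280.0), 2))  # 3.71
# A second doubling adds the same amount, because of the logarithm:
print(round(co2_forcing(1120.0, 560.0), 2))  # 3.71
```

The logarithm is the whole point of the form: each doubling of C adds the same increment of F, rather than F growing in proportion to concentration.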

How did you select these 34 CMIP6 climate models? Are they using the same GHG concentrations history that we had?

Another comparison:

https://www.realclimate.org/index.php/climate-model-projections-compared-to-observations/

Are you saying that Roy is wrong?

The RealClimate link undoubtedly provides a more comprehensive perspective on the comparison of climate models with observations. But one doesn’t have to choose one or the other; understanding “both” perspectives is always an option.

In science we are skeptical and can be critical of anyone’s analysis.

I am skeptical of YOUR science.

“RLH says: I am skeptical of YOUR science.”

One of the hallmarks of intellectual honesty is to apply the same rigorous standards to one’s own beliefs as one does to others’ claims.

This is where most self-identified skeptics go wrong.

To be fair, introspection is very difficult.

“One of the hallmarks of intellectual honesty is to apply the same rigorous standards to one’s own beliefs as one does to others’ claims.”

Yes Mark, and you avoided doing that: “This is where most self-identified skeptics go wrong.”

You conveniently forgot to mention “Warmists”. You know, those people who believe ice cubes can boil water…

To be fair, introspection is very difficult.

Nate

“In science we are skeptical and can be critical of anyone’s analysis.”

True!

But… regardless of how true that is, for people like ‘RLH’ it only applies if data processing and/or analysis shows signs of cooling.

That’s why I call him ‘Blindsley H00d’: he has always been blind in the same eye.

RLH, i.e. Blindsley H00d

“I am skeptical of YOUR science.”

To be skeptical of any science means being able to contradict its results technically and/or scientifically.

That is exactly the opposite of what you do.

Like Robertson and all the pseudoskeptics infesting this blog, all you are able to do is to claim things without any real, consistent proof.

The best example is your claim, five years ago, that (TMIN+TMAX)/2 would give wrong daily temperature averages compared with the true average of hourly data.

I disproved your claim using three temperature data sets: from the German Weather Office, from the METEOSTAT source and finally from the USCRN station data.

All you were able to do in response was to polemically discredit my results – once more without any technical proof.
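To show what is actually being compared in the (TMIN+TMAX)/2 dispute, here is a minimal Python sketch using purely synthetic, hypothetical 24-hour cycles (not the German Weather Office, METEOSTAT or USCRN data mentioned above). For a symmetric sinusoidal day the two daily estimates coincide; adding a hypothetical second harmonic makes the cycle asymmetric and they diverge. Whether such offsets matter for long-term trends can only be settled with real hourly records.

```python
import math
from statistics import fmean


def daily_means(hourly):
    """Return (mean of the 24 hourly values, (Tmin + Tmax) / 2)."""
    return fmean(hourly), (min(hourly) + max(hourly)) / 2.0


def synthetic_day(mean, amp1, amp2):
    """Hypothetical 24-hour cycle: a sinusoid peaking at 15:00, plus an optional
    cos(2*theta) harmonic that makes the cycle asymmetric."""
    day = []
    for hour in range(24):
        theta = 2.0 * math.pi * (hour - 9) / 24.0
        day.append(mean + amp1 * math.sin(theta) + amp2 * math.cos(2.0 * theta))
    return day


print(daily_means(synthetic_day(10.0, 5.0, 0.0)))  # both close to 10.0
print(daily_means(synthetic_day(10.0, 5.0, 2.0)))  # mean ~10.0, (Tmin+Tmax)/2 ~8.27
```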

According to Berkeley Earth, NOAA has been unable to report data from a massive number of stations around the world since August 2025. As a non-scientist and just an interested person in these matters, I continue to be amazed at the poor quality of our current data (inferior siting of stations in the U.S. for example.) The developed nations have been spending massive amounts of money on CO2 abatement, research, etc and we can’t produce reliable temperature data? Apparently, the NOAA issue is a botched computer conversion (I think software). If NOAA doesn’t care enough to treat that as a high priority, why should I care about warming?

Then there is the older temperature data, from, say, 1875 onward. John Christy wrote a book entitled “Is It Getting Hotter in Fresno…Or Not?” His research makes it clear that the older temperature records, at least in Fresno, are quite dicey.

Then there is the land temperature record outside the USA since 1875: vast areas that are underpopulated, subsistence societies, industrialized nations ravaged by two world wars, and many former colonies of advanced Western nations that lost that expertise (technology and interest in science) when colonialism ended.

No, I really am coming to the conclusion that our “vision” of temperature trends is quite limited. I am quite skeptical that scientists can take sparse and often bad data and somehow magically produce something that approximates reality. I do trust the satellite data, but that takes us back only to 1979.

And that is 29% of the earth’s surface.

“Apparently, the NOAA issue is a botched computer conversion (I think software). If NOAA doesn’t care enough to treat that as a high priority, why should I care about warming?”

See:

https://www.ncei.noaa.gov/access/crn/

“The United States Climate Reference Network (USCRN) is a sustained network of high-quality weather monitoring stations across the contiguous U.S., Alaska, and Hawaii. These stations use resilient instrumentation to measure temperature, precipitation, wind speed, soil conditions, and more.”

My quote should also include this statement:

“The developed nations have been spending massive amounts of money on CO2 abatement, research, etc and we can’t produce reliable temperature data?”

In response to the concern that:

“As a non-scientist and just an interested person in these matters, I continue to be amazed at the poor quality of our current data (inferior siting of stations in the U.S. for example.)”

This is true for the raw U.S. station network, but it is not ignored. Scientists are well aware of these problems and attempt to correct for these using bias adjustment algorithms.

One way to test whether those adjustments are reasonable is to compare the officially adjusted record against the pristine reference network linked above.

https://moyhu.blogspot.com/2020/03/usa-temperatures-comparison-of-moyhu.html
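The comparison suggested above can be sketched as a difference series: subtract the reference-network anomalies from the adjusted-network anomalies over their common period and look at the trend of the difference. This minimal Python sketch assumes both inputs are already annual anomalies on matching years; the years and values are hypothetical. A difference trend near zero would suggest the adjustments add no spurious warming or cooling relative to the reference, while a constant offset between the two is harmless.

```python
from statistics import fmean


def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    mx, my = fmean(xs), fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


def difference_trend(years, adjusted, reference):
    """Trend (deg C per decade) of adjusted-minus-reference anomalies."""
    diff = [a - r for a, r in zip(adjusted, reference)]
    return 10.0 * ols_slope(years, diff)


# Hypothetical annual anomalies over a common period.
years = list(range(2006, 2025))
reference = [0.03 * (y - 2006) for y in years]
adjusted_ok = [r + 0.05 for r in reference]  # constant offset only
adjusted_drift = [r + 0.01 * (y - 2006) for y, r in zip(years, reference)]  # spurious drift
print(difference_trend(years, adjusted_ok, reference))     # close to zero
print(difference_trend(years, adjusted_drift, reference))  # about 0.1
```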

See: (go to the end of the post)

https://berkeleyearth.org/october-2025-temperature-update/

This is the correct link for my 7:39 PM comment.

https://agupubs.onlinelibrary.wiley.com/doi/full/10.1002/2015GL067640

Thomas Hagedorn

Your Berkeley Earth link explicitly states that station coverage remains sufficient to constrain the global average temperature with low uncertainty.

Unless you are attempting to analyze regional temperature trends in areas such as Kazakhstan or parts of Africa, it is unclear how this issue undermines the global surface temperature record.

So, at the outset of my original post I noted that I am not a scientist. I do have some very dated training and experience in atmospheric research. Global warming (now rebranded as climate change), along with COVID messaging and public policy, has made me lose a lot of confidence in the objectivity of science. So I approach these matters from a critical (my favorite description of my position) or skeptical viewpoint. I saw Berkeley Earth’s comment that you referenced. I also note that they get funding from an American oligarch, Bill Gates. That creates more than a little pressure and incentive to convince themselves and others that their methodology works, even when they lose that many data points. (Look at the global map on that link. The loss is massive.)

And I continue to be amazed at the poor quality of U.S. weather station siting and at NOAA blowing a computer conversion for such important data. And this is supposedly an existential, all-hands-on-deck crisis. MOVE THE DARN STATIONS. USE METHODOLOGY IN PLACE SINCE THE 70s FOR A COMPUTER CONVERSION. I guess I shouldn’t be surprised. I have heard a number of examples of antiquated technology and inertia in government…IRS, air traffic control, social security, treasury department. It is like it is still 1980.

I have read many of the skeptical/critical books on climate change and that is why I have this skeptical view. They just seem to make a better case.

“Apparently, the NOAA issue is a botched computer conversion (I think software).”

Evidence?

You are very tedious, sir. I didn’t make that up. I dug in a little and tried to find out the cause. Use AI for a little while and you’ll get more background on it. Berkeley Earth is reporting on it. They use some other data sources, but they show a map of NOAA’s global reporting network sites, and large parts of the globe (outside the US) are dark (if it is still uncorrected).

Still, it would be nice to know the source of this information. And be able to look at it.

Is that too much to ask?

The article that I have linked below has an opening section with some very insightful and wise observations about data and its use. Even though its author is a finance professor who focuses on investment valuations and decisions, that section applies to anyone trying to use data in a sophisticated way. I see many applications of his observations to climate science. Just ignore the rest of his blog post after the opening section on data. The author, Damodaran, is quite experienced and respected in his field:

https://aswathdamodaran.blogspot.com/2026/01/data-update-1-for-2026-push-and-pull-of.html

Good statistics is good.

But unless you can show us Climate Science specifically NOT doing this, I don’t see the relevance here.

1. Nate’s question for evidence about:

“Apparently, the NOAA issue is a botched computer conversion (I think software).”

2. Hagedorn boy’s ridiculous reply:

“You are very tedious, sir. I didn’t make that up. I dug in a little and tried to find out the cause.”

*

No one said that you ‘made that up’, Hagedorn boy. However, you clearly wrote it.

Hence, you merely show that you were unable to provide evidence for your polemical, superficial claim – as Nate asked.

*

Too many people think, guess and discredit here, by the way. Too few look at data and compare their processing. After all, the data is available to all of us…

*

Let’s do what Hagedorn would never be able to do: a comparison of three global, land-only anomaly time series from Jan 1900 to Dec 2024:

– 1. NOAA Climate at a Glance (wrt 1901-2000)

– 2. Berkeley Earth (wrt 1951-1980)

– 3. my layman’s evaluation based on the raw GHCN daily data (wrt 1991-2020)

https://drive.google.com/file/d/1b7Ag4ED7C8zPcgDJ4GPaURKKYlSpk1b_/view

and in addition, of

– 4. UAH 6.1 LT (wrt 1991-2020)

https://drive.google.com/file/d/1t8KzmaddoZfb6tFs1ZqK3eJq3rUPWdLz/view

All anomalies were, of course, shifted by subtracting each time series’ own mean over the common period (1991-2020).
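Shifting each series to a common reference period simply means subtracting its own mean over that period. Here is a minimal Python sketch, assuming one anomaly value per time step with its year alongside (monthly data work the same way if the year is repeated for each month); the function name and example numbers are hypothetical. The shift changes only the offset of a series, never its trend.

```python
from statistics import fmean


def rebaseline(years, anomalies, start=1991, end=2020):
    """Subtract the series' own mean over [start, end] (inclusive) from every value."""
    base = fmean(a for y, a in zip(years, anomalies) if start <= y <= end)
    return [a - base for a in anomalies]


# Hypothetical series expressed relative to some older baseline (offset +0.5).
years = list(range(1900, 2025))
old_baseline = [0.5 + 0.01 * (y - 1900) for y in years]
shifted = rebaseline(years, old_baseline)
window = [a for y, a in zip(years, shifted) if 1991 <= y <= 2020]
print(abs(fmean(window)) < 1e-9)  # True: mean over 1991-2020 is now zero
```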

*

The graphs couldn’t show more clearly that, although NOAA has the highest trend (in °C/decade) for the period since 2000,

NOAA: 0.42 ± 0.03

Berk: 0.33 ± 0.03

Bin: 0.32 ± 0.02

UAH: 0.31 ± 0.02

its data can by no means be discredited as being based on badly sited stations.
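Trends with uncertainties like those listed above are commonly computed as an OLS slope with its standard error. The comment does not say how the ± values were obtained, so the following minimal Python sketch is only an assumption: it fits monthly anomalies against decimal time and reports the slope and a naive 1-sigma standard error in °C/decade. It ignores autocorrelation in monthly data, which generally makes real uncertainties larger than this naive estimate. The input series is hypothetical.

```python
import math
from statistics import fmean


def trend_with_se(t, y):
    """OLS trend and its naive 1-sigma standard error, both per decade.

    t: decimal years (e.g. 2000 + (month - 0.5) / 12); y: anomalies in deg C.
    Assumes independent residuals, i.e. no autocorrelation correction.
    """
    n = len(y)
    mt, my = fmean(t), fmean(y)
    sxx = sum((ti - mt) ** 2 for ti in t)
    slope = sum((ti - mt) * (yi - my) for ti, yi in zip(t, y)) / sxx
    intercept = my - slope * mt
    rss = sum((yi - (intercept + slope * ti)) ** 2 for ti, yi in zip(t, y))
    se = math.sqrt(rss / (n - 2) / sxx)
    return 10.0 * slope, 10.0 * se


# Hypothetical monthly anomalies, Jan 2000 - Dec 2024: 0.033 C/yr plus a small
# alternating wiggle so the residuals are not exactly zero.
t = [2000 + (m + 0.5) / 12 for m in range(25 * 12)]
y = [0.033 * (ti - 2000) + (0.1 if m % 2 else -0.1) for m, ti in enumerate(t)]
trend, se = trend_with_se(t, y)
print(f"{trend:.2f} +/- {se:.3f} C/decade")
```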

The differences between the surface time series are due much more to

– the anomaly construction method chosen

and

– the presence or absence of interpolation (I never use this technique).

*

Yes: NOAA has faced criticism for years over its ‘Pairwise Homogenization Algorithm’, designed long ago by Menne et al.

*

All data sources will be added as soon as possible.

Sources for the land-only series

NOAA

https://www.ncei.noaa.gov/access/monitoring/climate-at-a-glance/global/time-series/globe/land/tavg/1/0/1900-2025

Berkeley Earth

https://berkeley-earth-temperature.s3.us-west-1.amazonaws.com/Global/Complete_TAVG_complete.txt

UAH 6.1 LT

https://vortex.nsstc.uah.edu/data/msu/v6.1/tlt/uahncdc_lt_6.1.txt

(data column 2)

Bin’s eval of GHCN daily

https://www.ncei.noaa.gov/pub/data/ghcn/daily/

*

GHCN daily provides data from about 130,000 stations, over 40,000 of which have temperature data.

Half of them are in the US.

Thus it makes absolutely no sense to generate time series by averaging at station level, as the globe would then look like a US backyard.

To avoid this, all local station anomalies are first stored in grid cells (of the same 2.5 degree size as UAH’s); the grid cells are then averaged for each latitude band and finally latitude-weighted.
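The three steps described above (station anomalies into 2.5° cells, cells averaged per latitude band, bands weighted by latitude) can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not Bindidon's actual code: it assumes cells within a band count equally, weights each band by the cosine of its centre latitude, and simply skips empty bands. The station tuples are hypothetical.

```python
import math
from collections import defaultdict
from statistics import fmean

CELL_DEG = 2.5  # grid cell size in degrees, as stated in the comment above
N_LAT = int(180 / CELL_DEG)  # 72 latitude bands


def cell_index(lat, lon):
    """(latitude band, longitude column) of the 2.5-degree cell containing a station."""
    i = min(int((lat + 90.0) // CELL_DEG), N_LAT - 1)  # lat = +90 goes in the top band
    j = int(((lon + 180.0) % 360.0) // CELL_DEG)
    return i, j


def gridded_mean(stations):
    """Area-weighted mean anomaly for one month; stations: (lat, lon, anomaly) tuples."""
    # 1) Collect station anomalies per grid cell and average them.
    cells = defaultdict(list)
    for lat, lon, anom in stations:
        cells[cell_index(lat, lon)].append(anom)
    cell_means = {key: fmean(vals) for key, vals in cells.items()}

    # 2) Average the populated cells within each latitude band.
    bands = defaultdict(list)
    for (i, _j), value in cell_means.items():
        bands[i].append(value)

    # 3) Weight each populated band by cos(latitude of its centre); empty bands are skipped.
    num = den = 0.0
    for i, values in bands.items():
        weight = math.cos(math.radians(-90.0 + (i + 0.5) * CELL_DEG))
        num += weight * fmean(values)
        den += weight
    return num / den


# Hypothetical month: 100 stations crowded into one cell (anomaly +1.0) and a
# single station in another cell of the same latitude band (anomaly 0.0).
crowded = [(40.1, -100.1, 1.0)] * 100
lonely = [(40.1, 10.0, 0.0)]
print(fmean(a for _, _, a in crowded + lonely))  # station average, ~0.99
print(gridded_mean(crowded + lonely))  # 0.5: each cell counts once
```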

Of the 40,000+ stations, 26,273 were selected; 14,326 of these are in the US, giving 11,947 outside.

Not all of them were present between 1900 and 2025, of course; in 2020, for example, 12,866 stations located in 1856 cells (60% of Earth’s land) were active.

With the area weighting performed via the grid cells, instead of 46% non-US stations competing with 54% US stations, one has, for example in 2020, 1,686 cells outside the US competing with 170 inside, the latter forming about 9% of the total.
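The shares quoted above can be checked with a few lines of Python. All numbers are taken directly from the comment; as there, the station shares refer to all 26,273 selected stations, while the cell counts refer to 2020 only.

```python
# Station and cell counts quoted above (GHCN daily selection; cells active in 2020).
selected_stations = 26_273
us_stations = 14_326
non_us_stations = selected_stations - us_stations
cells_outside_us, cells_inside_us = 1_686, 170
total_cells = cells_outside_us + cells_inside_us

print(non_us_stations)  # 11947
print(f"{100 * us_stations / selected_stations:.1f}% US stations")  # 54.5% US stations
print(f"{100 * non_us_stations / selected_stations:.1f}% non-US stations")  # 45.5% non-US stations
print(total_cells)  # 1856
print(f"{100 * cells_inside_us / total_cells:.1f}% US cells")  # 9.2% US cells
```

The 1,856 cells obtained this way match the 2020 cell count given earlier in the comment.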