The increasing global ocean heat content (OHC) is often pointed to as the most quantitative way to monitor long-term changes in the global energy balance, which is believed to have been altered by anthropogenic greenhouse gas emissions. The challenge is that long-term temperature changes in the ocean below the top hundred meters or so become exceedingly small and difficult to measure. The newer network of Argo floats since the early 2000s has improved global coverage dramatically.

A new Cheng et al. (2020) paper describing record warm ocean temperatures in 2019 has been discussed by Willis Eschenbach, who correctly reminds us that such “record setting” changes in the 0-2000 m ocean heat content (reported in zettajoules, where 1 ZJ is 10^21 joules) amount to exceedingly small temperature changes. I calculate from their data that 2019 was only 0.009 deg. C warmer than 2018.
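To show why a heat content change measured in zettajoules maps to such a small temperature change, here is a rough back-of-envelope sketch. The ocean area, seawater density, and specific heat are my own round-number assumptions (they are not taken from Cheng et al.), and the calculation ignores the fact that ocean area shrinks with depth, so treat the result as an order-of-magnitude check only.

```python
# Back-of-envelope conversion between a 0-2000 m ocean heat content change
# and the corresponding layer-average temperature change.
# All physical constants below are assumed round numbers, not values from the paper.

OCEAN_AREA_M2 = 3.6e14          # assumed global ocean surface area
LAYER_DEPTH_M = 2000.0          # depth of the layer discussed in the text
DENSITY_KG_M3 = 1025.0          # assumed seawater density
SPECIFIC_HEAT_J_KG_K = 3990.0   # assumed seawater specific heat
JOULES_PER_ZJ = 1e21            # 1 zettajoule = 10^21 joules


def layer_heat_capacity_j_per_k():
    """Heat needed to warm the whole 0-2000 m layer by 1 deg. C."""
    volume_m3 = OCEAN_AREA_M2 * LAYER_DEPTH_M
    return volume_m3 * DENSITY_KG_M3 * SPECIFIC_HEAT_J_KG_K


def zettajoules_to_delta_t(delta_ohc_zj):
    """Layer-average temperature change (deg. C) for a heat content change in ZJ."""
    return delta_ohc_zj * JOULES_PER_ZJ / layer_heat_capacity_j_per_k()


def delta_t_to_zettajoules(delta_t_c):
    """Heat content change (ZJ) for a layer-average temperature change in deg. C."""
    return delta_t_c * layer_heat_capacity_j_per_k() / JOULES_PER_ZJ


if __name__ == "__main__":
    print("deg. C per ZJ:", zettajoules_to_delta_t(1.0))
    print("ZJ for 0.009 deg. C:", delta_t_to_zettajoules(0.009))
```

Under these assumptions, a 0.009 deg. C change corresponds to a heat content change in the low tens of zettajoules, which is why the numbers look large in joules and tiny in degrees.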

Over the years I have frequently pointed out that the global energy imbalance (less than 1 W/m2) corresponding to such small rates of warming is much smaller than the accuracy with which we know the natural energy flows (the imbalance is only 1 part in 300 or so of those flows), which means Mother Nature could be responsible for the warming and we wouldn’t even know it.

The Cheng (2017) dataset of 0-2000m ocean heat content changes extends the OHC record back to 1940 (with little global coverage) and now up through 2019. The methodology of that dataset uses optimum interpolation techniques to intelligently extend the geographic coverage of limited data. I’m not going to critique that methodology here, and I agree with those who argue creating data where it does not exist is not the same as having real data. Instead I want to answer the question:

If we take the 1940-2019 global OHC data (as well as observed sea surface temperature data) at face value, and assume all of the warming trend was human-caused, what does it imply regarding equilibrium climate sensitivity (ECS)?

Let’s assume ALL of the warming of the deep oceans since 1940 has been human-caused, and that the Cheng dataset accurately captures that. Furthermore, let’s assume that the HadSST sea surface temperature dataset covering the same period of time is also accurate, and that the RCP radiative forcing scenario used by the CMIP5 climate models also represents reality.

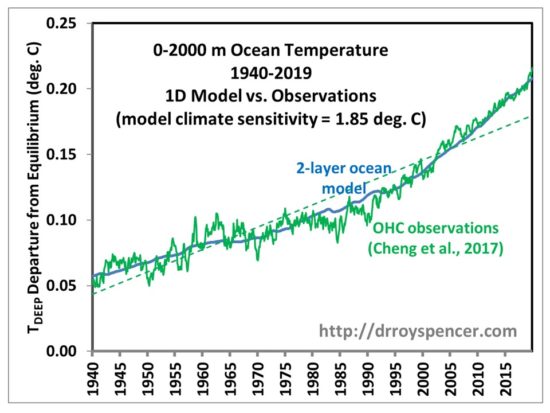

I updated my 1D model of ocean temperature with the Cheng data so that I could match its warming trend over the 80-year period 1940-2019. That model also includes El Nino and La Nina (ENSO) variability to capture year-to-year temperature changes. The resulting fit I get with an assumed equilibrium climate sensitivity of 1.85 deg. C is shown in the following figure.

Thus, based upon basic energy budget considerations in a 2-layer ocean model, we can explain the IPCC-sanctioned global temperature datasets with a climate sensitivity of only 1.85 deg. C. And even that assumes that ALL of the warming is due to humans which, as I mentioned before, is not known since the global energy imbalance involved is much smaller than the accuracy with which we know natural energy flows.

If I turn off the ENSO forcing I have in the model, then after readjusting the model free parameters to once again match the observed temperature trends, I get an ECS of about 1.7 deg. C. In that case, there are only 3 adjustable model parameters (ECS, the ocean top layer thickness [18 m], and the assumed rate of energy exchange between the top layer and the rest of the 0-2000m layer [2.1 W/m2 per deg. C difference in layer temperatures away from energy equilibrium]). Otherwise, there are 7 adjustable parameters in the model with ENSO effects turned on.

For those who claim my model is akin to John von Neumann’s famous claim that with four parameters he can fit an elephant, and with five he can make it wiggle its trunk, I should point out that none of the model’s adjustable parameters (mostly scaling factors) vary in time. They apply equally to each monthly time step from 1765 through 2019. The long-term behavior of the model in terms of trends is mainly governed by (1) the assumed radiative forcing history (RCP6), (2) the assumed rate of heat storage (or extraction) in the deep ocean as the surface warms (or cools), and (3) the assumed climate sensitivity, all within an energy budget model with physical units.
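For readers who want to see how those three ingredients interact, here is a minimal sketch of a 2-layer forcing-feedback model with a monthly time step. It is not my actual model code: it leaves out the ENSO terms, it uses a made-up linear forcing ramp rather than the RCP6 history, and it assumes 3.7 W/m2 for the forcing from doubled CO2 along with round-number seawater properties. The function name `run_two_layer` and its arguments are purely illustrative.

```python
# Minimal 2-layer ocean energy budget sketch (temperatures are departures
# from energy equilibrium, in deg. C). Illustrative only: no ENSO terms,
# hypothetical forcing, assumed constants.

SECONDS_PER_MONTH = 365.25 * 24 * 3600 / 12
RHO_CP = 1025.0 * 3990.0   # assumed volumetric heat capacity of seawater, J/(m^3 K)


def run_two_layer(forcing_w_m2, ecs_c=1.7, top_depth_m=18.0,
                  total_depth_m=2000.0, exchange_w_m2_k=2.1, f2x_w_m2=3.7):
    """Step the model forward one month per forcing value.

    Returns a list of (top_layer_temp, deep_layer_temp) tuples.
    f2x_w_m2 (forcing from doubled CO2) is an assumed value.
    """
    feedback = f2x_w_m2 / ecs_c                        # net feedback parameter, W/m2 per deg. C
    c_top = RHO_CP * top_depth_m                       # heat capacity of top layer, J/(m^2 K)
    c_deep = RHO_CP * (total_depth_m - top_depth_m)    # rest of the 0-2000 m layer

    t_top = 0.0
    t_deep = 0.0
    history = []
    for forcing in forcing_w_m2:
        # Heat flows downward when the top layer is warmer than the deep layer.
        exchange = exchange_w_m2_k * (t_top - t_deep)
        # Simple explicit (forward Euler) update of both layers.
        t_top += SECONDS_PER_MONTH * (forcing - feedback * t_top - exchange) / c_top
        t_deep += SECONDS_PER_MONTH * exchange / c_deep
        history.append((t_top, t_deep))
    return history


if __name__ == "__main__":
    # Hypothetical forcing: a linear ramp from 0 to 2.5 W/m2 over 255 years.
    n_months = 255 * 12
    ramp = [2.5 * i / (n_months - 1) for i in range(n_months)]
    top, deep = run_two_layer(ramp)[-1]
    print("final top-layer departure (deg. C):", top)
    print("final deep-layer departure (deg. C):", deep)
```

With a constant forcing equal to the doubled-CO2 value, both layers in this sketch eventually settle at the assumed ECS, which is the sense in which the sensitivity parameter controls the long-term behavior. A higher exchange rate or a thicker deep layer slows the surface warming without changing that end point.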

My conclusion is that the observed trends in both surface and deep-layer temperature in the global oceans correspond to low climate sensitivity, only about 50% of what IPCC climate models produce. This is the same conclusion as Lewis & Curry made using similar energy budget considerations, but applied to two different averaging periods about 100 years apart rather than (as I have done) in a time-dependent forcing-feedback model.
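For reference, the two-period energy budget estimate of the kind Lewis & Curry used is usually written in the following form. The symbols are the conventional ones, not notation taken from this post:

$$
\mathrm{ECS} = F_{2\times}\,\frac{\Delta T}{\Delta F - \Delta N}
$$

Here $\Delta T$ is the change in global mean surface temperature between the two averaging periods, $\Delta F$ is the change in radiative forcing, $\Delta N$ is the change in the rate of heat uptake by the climate system (mostly the ocean), and $F_{2\times}$ is the forcing from a doubling of CO2. A time-dependent forcing-feedback model applies the same energy budget at every time step rather than between two period averages.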

Sea ice extent as of January 15 was 13.92 million km².