As continual fiddling with the global surface thermometer data leads to an ever-warmer present and an ever-cooler past, many of us are increasingly skeptical that beating a previous “warmest” year by hundredths of a degree has any real-world meaning. Yet, the current UN climate meeting in Lima, Peru, is setting the stage for some very real changes in energy policy that will inevitably make energy more expensive for everyone, no matter their economic status.

But there are some very good reasons to be skeptical of the claim that 2014 will be the “hottest year ever”…or, at the very least, skeptical that it has any real impact on people’s lives.

No One Has Ever Felt “Global Warming”

If you turn up your thermostat by 1 deg. F, you might feel slightly warmer during the few minutes it takes for the room to warm. But no one has felt the 1 deg. F rise in global average temperature over the last 50 to 100 years. It is too small to notice when we routinely experience day-night, day-to-day, and seasonal swings of tens of degrees.

The Urban Heat Island Effect Has Hopelessly Corrupted the Land Thermometer Data

Most thermometers measure temperature where people live, and people tend to build stuff that warms the local environment around the thermometer.

This is called the urban heat island (UHI) effect, and most of the warming occurs long before the thermometer site actually becomes “urban”. For instance, if you compare neighboring thermometers around the world, along with their population densities (as a rough indication of UHI influence), it is easy to demonstrate that substantial average UHI warming occurs even at low population densities: about 1 deg. F at only 10 persons per sq. km!

This effect, which has been studied and published on for many decades, has not been adequately addressed in the global temperature datasets, partly because there is no good way to apply a correction for it to individual thermometer sites.

2014 Won’t Be Statistically Different from 2010

For a “record” temperature to be statistically significant, it has to rise above the measurement error, and thermometers have many sources of error: changes in location, instrumentation, and time of day of measurement, inadequate coverage of the Earth, etc. Oh…and that pesky urban heat island effect.

A year that is a couple of hundredths of a degree warmer than a previous year (which is likely what 2014 will be) should be considered a “tie”, not a record.
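To make the “tie” reasoning concrete, here is a minimal Python sketch of one common way to judge whether the difference between two annual values means anything: compare that difference to a multiple of the combined uncertainty of the two values. The function name `difference_is_significant`, the factor `k = 2`, and the uncertainty figures below are hypothetical, chosen only for illustration and not taken from any temperature dataset. The sketch also assumes independent, random errors, so it does not capture systematic biases such as the urban heat island effect.

```python
import math


def difference_is_significant(t_new, t_old, sigma_new, sigma_old, k=2.0):
    """Return True if t_new - t_old exceeds k times the combined uncertainty.

    Hypothetical helper for illustration. Assumes the two annual values have
    independent random errors, so their standard uncertainties add in
    quadrature. Systematic biases (e.g. urban heat island) are NOT modeled.
    """
    diff = t_new - t_old
    combined = math.sqrt(sigma_new ** 2 + sigma_old ** 2)
    return diff > k * combined


# Hypothetical inputs (degrees, illustration only): a 0.02 degree "record"
# margin, with an assumed 0.05 degree uncertainty on each annual value.
print(difference_is_significant(0.02, 0.0, 0.05, 0.05))  # False
```

With these made-up inputs, the 0.02 degree margin is far smaller than the roughly 0.14 degree threshold (2 × √(0.05² + 0.05²)), so the sketch reports no significant difference: a statistical tie.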

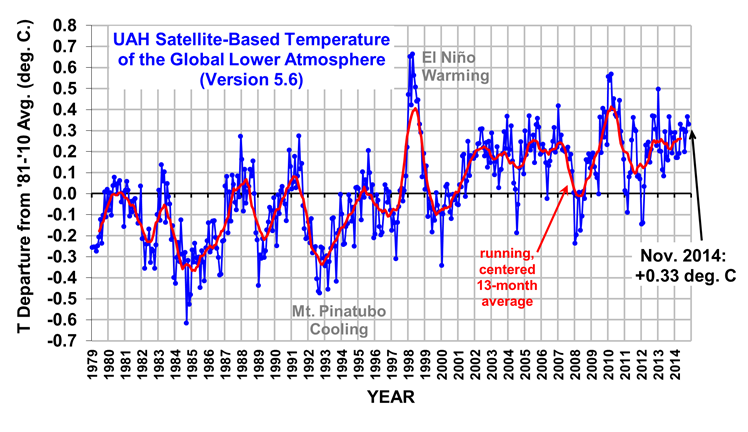

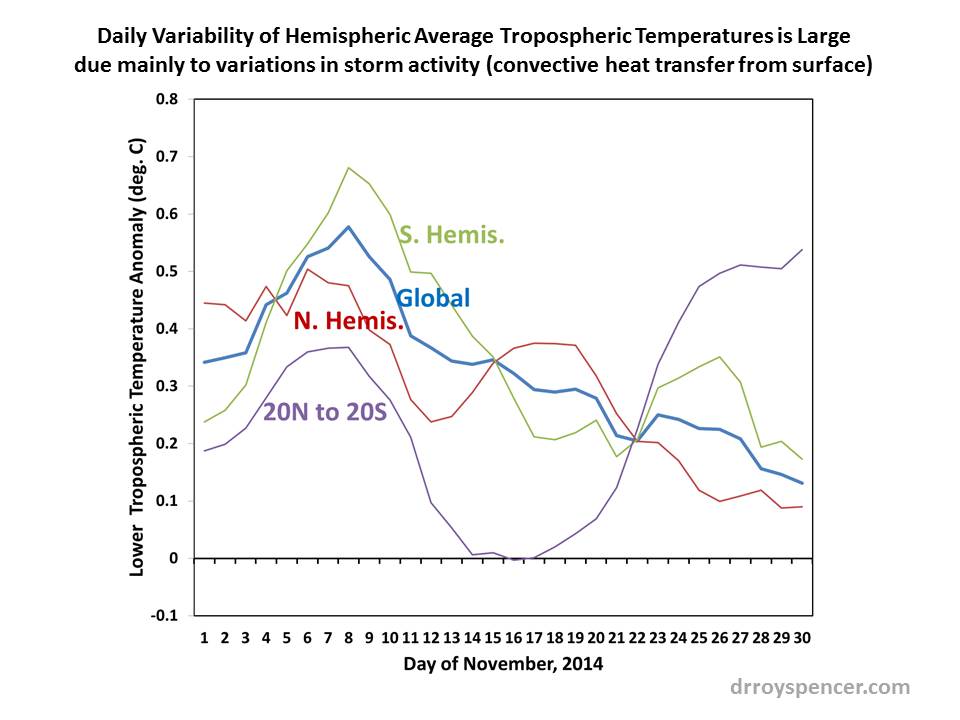

Our Best Technology, Satellites, Say 2014 Will Not Be the Warmest

Our satellite estimates of global temperature, which have much more complete geographic coverage than thermometers, reveal that 2014 won’t even be close to a record warm year.

In fact, in recent years the satellite and thermometer technologies seem to be diverging in what they tell us, with the thermometers continuing to warm and the satellite temperatures essentially flat-lining.

So, why have world governments chosen to rely on surface thermometers, which were never designed for high accuracy, and yet ignore their own high-tech satellite network of calibrated sensors, especially when the satellites also agree with weather balloon data?

I will leave it to the reader to answer that one.
